California updates AI guidance for safe and effective use in public schools

The California Department of Education shared the updated guidance on LinkedIn, outlining expectations for AI use across TK–12 classrooms.

The California Department of Education (CDE) has released updated guidance on the safe and effective use of artificial intelligence in California public schools, according to a post shared on LinkedIn.

The guidance, which applies to transitional kindergarten through grade 12, updates recommendations first issued in 2023 and is intended to support schools as AI tools become more common in teaching, assessment, and administration.

The California Department of Education oversees public education across the state and provides guidance, resources, and oversight for local educational agencies. The updated document is advisory rather than mandatory, but it sits alongside existing legal requirements under federal and state law.

Guidance focuses on professional learning and classroom use

In the LinkedIn post, the Department said the updated guidance aligns with State Superintendent of Public Instruction Tony Thurmond’s professional learning initiative and is designed to help educators understand both the benefits and limitations of AI. CDE stated, “The CDE has released new artificial intelligence guidance for the safe and effective use of AI in California schools.”

It added that the Department is “committed to supporting AI-focused professional learning for administrators and educators, educating them about the benefits and limitations of AI.”

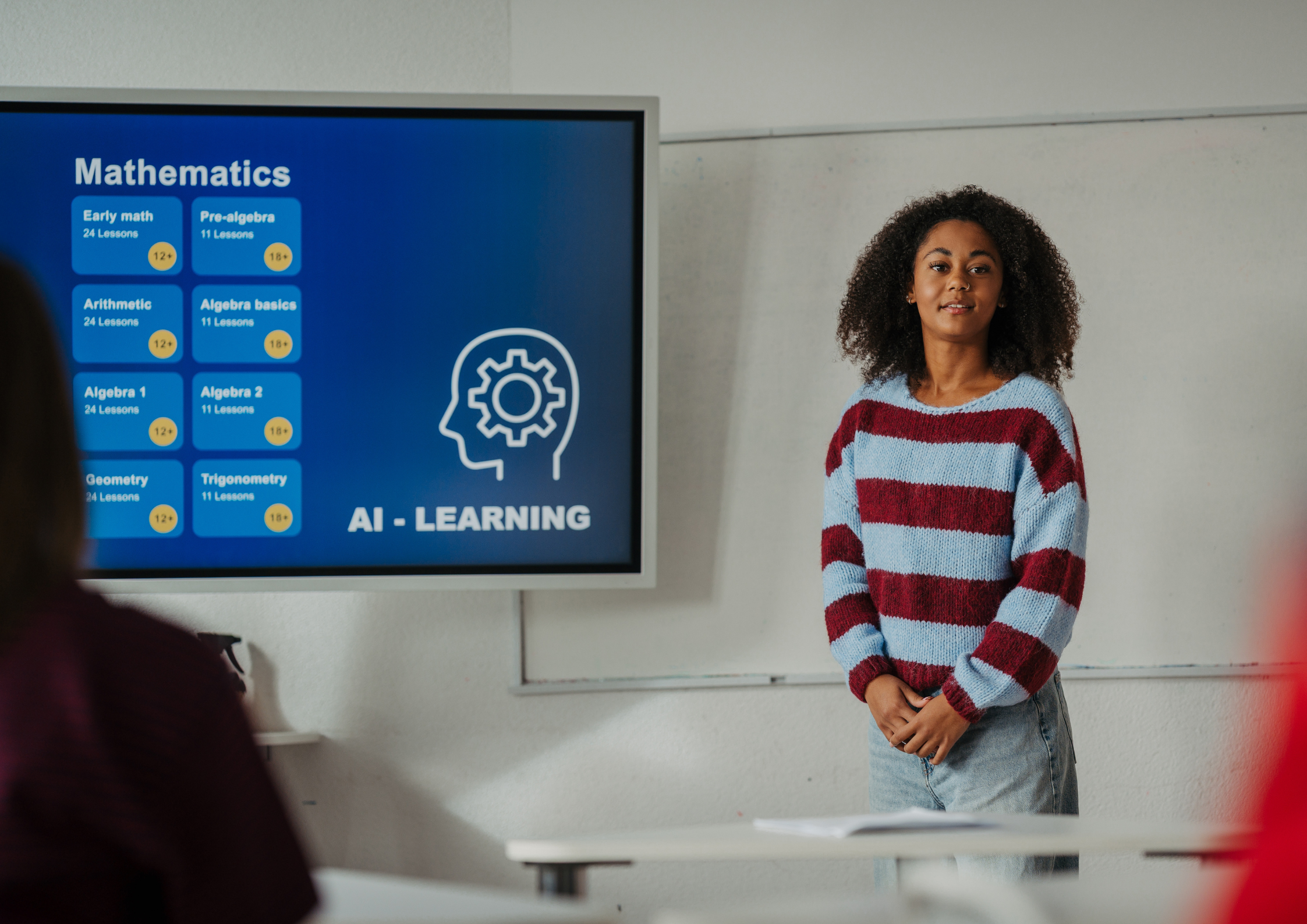

The guidance stresses that AI should support, rather than replace, relationships between educators and students. It positions human judgment, connection, and care as essential elements of learning, even as AI tools are introduced into classrooms.

The document notes that while AI can expand personalization and accessibility, schools must remain attentive to equity, bias, and access, particularly for students in underresourced communities.

Updated guidance tightens focus on data privacy and academic integrity

In the updated guidance, CDE places greater emphasis on data privacy and legal compliance as AI tools become more embedded in classroom practice. While the earlier 2023 guidance flagged privacy as a concern, the revised document goes further in setting out practical considerations for schools evaluating AI systems.

The update calls on schools and districts to scrutinize more closely how AI tools collect, store, and retain student data, including whether data entered into AI systems is used to further train models or is retained beyond immediate instructional use. It highlights the need for a clearer understanding of vendor data practices, data ownership, deletion policies, and access controls. It also reinforces that compliance with FERPA and COPPA remains mandatory regardless of whether AI tools are labeled as instructional, administrative, or experimental.

The revised guidance also strengthens its position on transparency, urging schools to seek greater clarity on how AI systems generate outputs and how automated decisions may affect students, particularly in assessment and feedback contexts.

Academic integrity receives expanded treatment in the update, with CDE explicitly discouraging blanket bans on AI use. Instead, the guidance encourages schools to define acceptable and unacceptable uses of AI more precisely and to revisit existing academic integrity policies in light of generative tools.

The update places increased emphasis on involving students in developing ethical use expectations, positioning this as a way to support responsible use, media literacy, and understanding of AI limitations, rather than relying solely on detection or enforcement mechanisms.

Greater scrutiny of classroom AI tools and their impact

The updated guidance also expands its discussion of the types of AI tools already in use across TK–12 schools, including adaptive learning platforms, intelligent tutoring systems, automated grading and feedback tools, classroom chatbots, immersive simulations, and AI-supported assessment models.

Compared with earlier versions, the update places stronger emphasis on the risks associated with these tools when used without adequate oversight. CDE cautions that AI-generated outputs should not be treated as neutral, objective, or authoritative, particularly where they influence grading, grouping, instructional pathways, or student evaluation.

Schools are encouraged to implement processes for ongoing evaluation of AI tools, including monitoring for inaccuracies, bias, and unintended consequences. The guidance also highlights the importance of human review, particularly in high-stakes contexts, reinforcing that AI should support professional judgment rather than replace it.

Equity and workforce preparation reinforced as core priorities

The updated guidance more explicitly connects AI literacy to workforce readiness and long-term economic trends, referencing growing demand for AI and data skills across industries. While earlier guidance emphasized awareness and experimentation, the revised document places stronger emphasis on ensuring students understand how AI systems function, where they can fail, and how they shape decisions.

CDE frames this as both an economic and equity issue, warning that uneven access to AI learning opportunities could widen existing digital divides. The update underscores the need for intentional support for schools serving rural, urban, and underresourced communities, where access to safe, well-supported AI tools and professional learning may be more limited.

The guidance positions equitable access to AI education as essential if schools are to prepare students not just to use AI tools, but to critically engage with them as informed participants in an AI-driven society.

Updated guidance remains non-prescriptive

The Department emphasized that the document is not a mandate and does not override existing law. Instead, it is intended to support local decision-making as schools navigate AI adoption.

CDE said the guidance “is meant to provide helpful guidance to our partners in education and is in no way required to be followed.”
