NASA lets AI plan Mars rover routes for the first time using Anthropic’s Claude

NASA’s Jet Propulsion Laboratory has demonstrated generative AI route planning on another planet, with Anthropic’s Claude creating navigation waypoints for the Perseverance rover, pointing to new models of autonomy that could reshape how complex systems are operated at extreme distance.

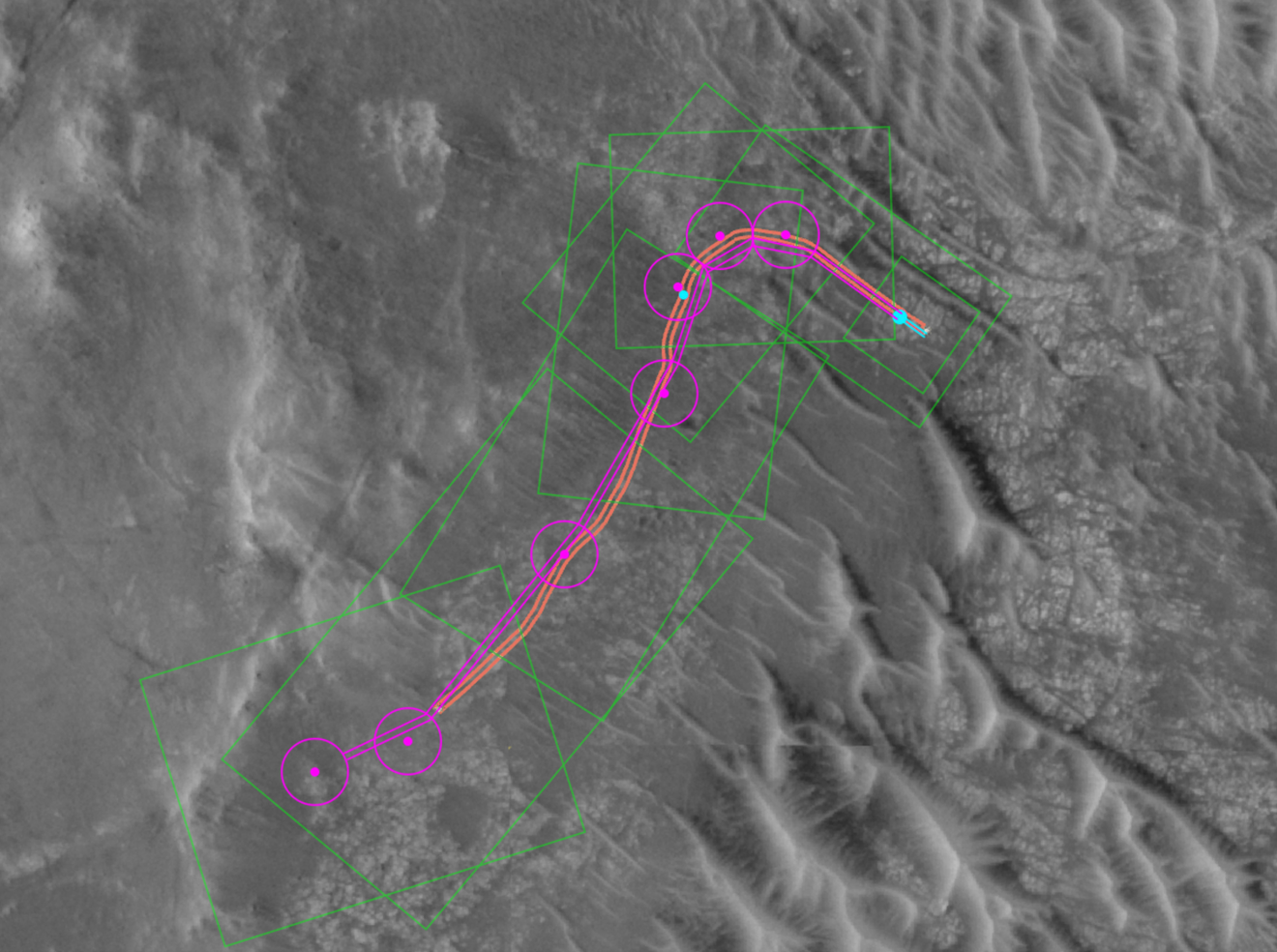

This annotated orbital image shows the route planned by AI, highlighted in magenta, alongside the path actually taken by NASA’s Perseverance Mars rover, shown in orange, during its December 10, 2025 drive at Jezero Crater. The drive was the second demonstration assessing how generative AI can be used in rover route planning. Photo credit: NASA/JPL-Caltech/University of Arizona

NASA’s Jet Propulsion Laboratory has completed the first rover drives on another world planned by artificial intelligence, marking a shift in how mission-critical navigation decisions can be made beyond Earth.

On December 8 and 10, 2025, the Perseverance rover executed two drives in Jezero Crater using waypoints generated by Anthropic’s Claude AI models rather than human route planners, a task that has traditionally required large teams on Earth and multiple planning cycles.

The demonstration matters because Mars operations are constrained by distance, with communication delays that prevent real-time control. By delegating complex planning tasks to AI systems trained on the same data used by experts, NASA is testing how autonomy can reduce workload, increase operational tempo, and support longer, more ambitious missions where human oversight is limited.

How Claude was used to plan rover routes

The AI-planned drives were led from JPL’s Rover Operations Center and carried out by NASA’s Perseverance rover on mission sols 1707 and 1709. Engineers used vision-language models to analyze high-resolution orbital imagery from the HiRISE camera aboard the Mars Reconnaissance Orbiter, alongside terrain and slope data derived from digital elevation models.

Working with Anthropic, the JPL team used the company’s Claude models to generate navigation waypoints, fixed locations that determine where the rover stops to receive its next set of instructions. Claude analyzed the same imagery and datasets used by human planners to identify hazards such as bedrock, boulder fields, and sand ripples, then produced a continuous route made up of short segments that could be safely executed by the rover.

On December 8, Perseverance drove 689 feet, or 210 meters, using AI-generated waypoints. Two days later, it completed a second drive of 807 feet, or 246 meters. In both cases, the routes were reviewed and validated before transmission to Mars.
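As a rough illustration of what "a continuous route made up of short segments" means in practice, the Python sketch below represents a route as a list of waypoints, measures each leg, and flags any leg that exceeds a length limit. It also cross-checks the reported meter and foot figures. The coordinates, the 30-meter limit, and the function names are all hypothetical and chosen only for illustration; none of them come from JPL or Anthropic, and this is not a description of how the actual planning pipeline works.

```python
from math import hypot

# Hypothetical limit for illustration only; not a value published by JPL.
MAX_SEGMENT_M = 30.0

METERS_PER_FOOT = 0.3048  # exact, by definition of the international foot


def segment_lengths(waypoints):
    """Straight-line length in meters of each leg between consecutive (x, y) waypoints."""
    return [
        hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(waypoints, waypoints[1:])
    ]


def overlong_segments(waypoints, max_len_m=MAX_SEGMENT_M):
    """Indices of legs longer than max_len_m; an empty list means every leg is short enough."""
    return [i for i, d in enumerate(segment_lengths(waypoints)) if d > max_len_m]


def meters_to_feet(meters):
    """Convert meters to feet."""
    return meters / METERS_PER_FOOT


# Made-up local coordinates in meters, not the actual Perseverance route.
route = [(0.0, 0.0), (18.0, 24.0), (48.0, 24.0), (48.0, 0.0)]
total_m = sum(segment_lengths(route))
print(f"{len(route) - 1} segments, {total_m:.0f} m total, overlong: {overlong_segments(route)}")

# Cross-check of the drive distances reported in the article.
for meters in (210, 246):
    print(f"{meters} m is about {meters_to_feet(meters):.0f} ft")
```

The conversion agrees with the figures above: 210 meters rounds to 689 feet and 246 meters rounds to 807 feet.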

Digital twin verification and human oversight

To ensure compatibility with the rover’s flight software, all AI-generated commands were processed through JPL’s digital twin, a virtual replica of Perseverance used to simulate rover behavior. Engineers verified more than 500,000 telemetry variables to confirm that the planned paths would not place the rover at risk before the commands were sent via NASA’s Deep Space Network.
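Conceptually, this kind of verification compares simulated telemetry values against allowed ranges and reports anything out of bounds. The Python sketch below shows that idea at toy scale. The variable names, limits, and the `find_violations` helper are hypothetical and are not drawn from JPL's digital twin or flight software, which the article does not describe in detail.

```python
def find_violations(telemetry, limits):
    """Return the names of variables that are missing or fall outside their allowed range.

    telemetry: dict mapping variable name -> simulated value
    limits:    dict mapping variable name -> (low, high) inclusive bounds
    """
    violations = []
    for name, (low, high) in limits.items():
        value = telemetry.get(name)
        # A missing reading is treated as a violation rather than silently passed.
        if value is None or not (low <= value <= high):
            violations.append(name)
    return violations


# Hypothetical variables and bounds, for illustration only.
limits = {
    "tilt_deg": (0.0, 20.0),
    "wheel_current_a": (0.0, 3.0),
    "battery_v": (24.0, 33.0),
}
simulated = {"tilt_deg": 12.0, "wheel_current_a": 3.5}

problems = find_violations(simulated, limits)
print("safe to send" if not problems else f"needs review: {problems}")
```

In this toy run, the over-limit wheel current and the missing battery reading are both flagged, so the plan would go back for review rather than being transmitted.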

This validation step reflects how AI is being introduced into real operations cautiously, with human review and established safety checks remaining central. According to JPL, only minor adjustments were required after review, primarily informed by ground-level imagery that the AI had not analyzed.

NASA Administrator Jared Isaacman comments, “This demonstration shows how far our capabilities have advanced and broadens how we will explore other worlds. Autonomous technologies like this can help missions to operate more efficiently, respond to challenging terrain, and increase science return as distance from Earth grows. It’s a strong example of teams applying new technology carefully and responsibly in real operations.”

Implications for autonomy beyond Mars

The Perseverance test highlights how generative AI could change operational models for space exploration, where human planners currently spend significant time manually designing routes limited to short distances to reduce risk. By automating parts of this process, NASA is exploring how rovers could eventually handle kilometer-scale drives with less direct intervention.

Vandi Verma, space roboticist at JPL and a member of the Perseverance engineering team, says, “The fundamental elements of generative AI are showing a lot of promise in streamlining the pillars of autonomous navigation for off-planet driving: perception (seeing the rocks and ripples), localization (knowing where we are), and planning and control (deciding and executing the safest path). We are moving towards a day where generative AI and other smart tools will help our surface rovers handle kilometer-scale drives while minimizing operator workload, and flag interesting surface features for our science team by scouring huge volumes of rover images.”

Matt Wallace, manager of JPL’s Exploration Systems Office, adds, “That is the game-changing technology we need to establish the infrastructure and systems required for a permanent human presence on the Moon and take the U.S. to Mars and beyond.”

While the test was limited in scope, it signals broader implications beyond planetary science. For EdTech and AI skills development, it offers a real-world example of vision-language models being applied to high-stakes decision-making, systems verification, and human-AI collaboration, areas increasingly relevant to advanced technical education and workforce training.
