BETT 2026: Turnitin warns assessment models must evolve as AI use accelerates

Turnitin used a BETT 2026 session to tackle a question that has become unavoidable for universities and schools: how much AI use is acceptable before assessment stops measuring learning.

Speaking in a talk titled “How much is too much?”, Turnitin Chief Product Officer Annie Cacatelli avoided offering a simple threshold, instead describing a fast-moving landscape where policy, pedagogy, and technology are still catching up with student behavior.

“If you’re waiting for me to give you an answer, you’re going to be disappointed,” Cacatelli told the audience. “Because the answer is somewhere between ‘I don’t know’ and ‘it depends.’”

AI arrived “overnight” and education is still adjusting

Cacatelli framed generative AI as fundamentally different from earlier shifts in education technology because of the speed of adoption and the lack of preparation time for institutions.

“Generative AI was introduced suddenly,” she said. “I always know the date, November 30th, 2022. It came suddenly.” She noted that education systems usually have time to observe patterns and adjust practice, something that did not happen with generative AI. “Usually you’ve got a little bit of time to adjust, to learn it, to see patterns,” she explained. “That didn’t happen here. It happened overnight.”

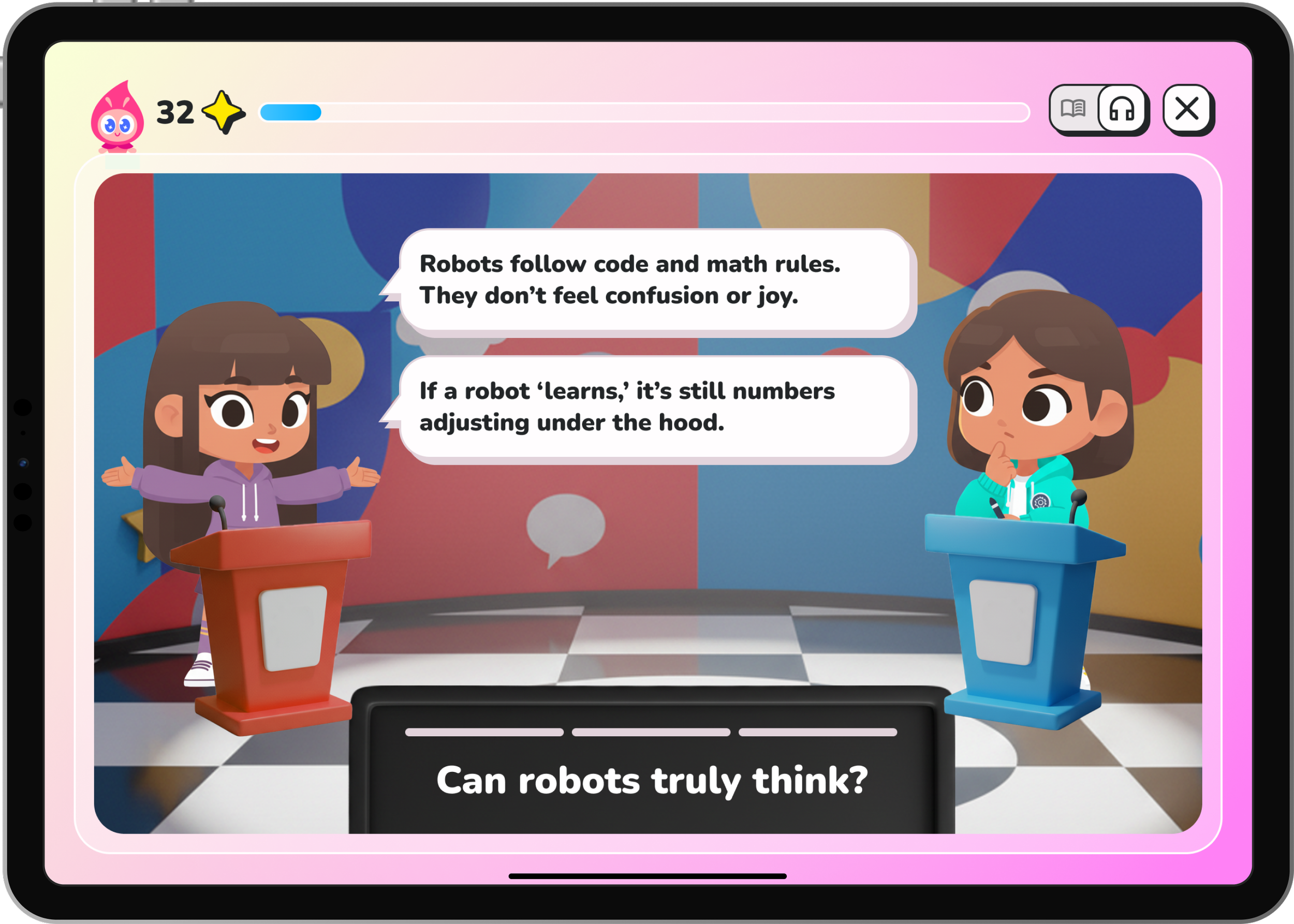

Cacatelli argued that the central challenge now is balancing opportunity with protection of core academic skills. “How do we enhance but not take away from critical thinking?” she asked. “How do we use it for research and writing skills… how do we protect them, but then how do we bolster them?”

She cautioned against expecting rapid clarity. “I’d like to tell you that we’re going to have answers in the next year or two,” she added. “I don’t think that’s the case.”

Instead, she urged collaboration across the sector. “We need to come together as an education community and start talking,” Cacatelli said, “and understand transparently ways that can help and ways that can’t help.”

From plagiarism detection to visibility into process

Cacatelli positioned Turnitin’s current direction within its longer history of responding to academic integrity challenges created by new technology.

“More than 25 years ago, we were founded on a mission to work with educators to provide academic integrity solutions to protect authentic learning,” she said.

She traced that evolution from early internet plagiarism to essay mills and paper reuse. “Teachers were worried students were going to be lifting large chunks of text from the internet without proper citation,” she noted. “Similar problem, different technological threat.”

When generative AI arrived, she acknowledged that institutions initially wanted tools to identify misuse. “When ChatGPT came out in 2022, Turnitin quickly launched an AI detector,” Cacatelli recalled. “Because that’s what we heard back then.”

She was clear, however, that the company’s thinking has shifted. “I reject the word detection,” she stressed. “It’s the wrong concept.”

Instead, she emphasized the need for visibility rather than judgment. “The key here is transparency, not detection,” she said. “They have different motivations.”

Cacatelli warned against treating AI indicators as definitive proof. “Our customers aren’t using our AI detector as judge and jury,” she added. “And they shouldn’t.”

She described the intended role as a conversation starter. “It’s always about starting a conversation with the student,” Cacatelli said. “How did you use it? Was it useful? What made you think that was the appropriate use?”

Research signals concern, but not the full picture

Cacatelli referenced multiple studies to underline why institutions are worried about AI dependence.

According to a 2025 KPMG global AI attitudes study, she said, “more than 75 percent of students have felt that they could not complete their work without the help of AI,” while “four in five students say they put less effort into their studies and assessment, knowing that they can rely on AI.”

“These numbers are alarming,” she acknowledged. “But they’re not the whole story.” She also pointed to early findings from an MIT study on AI and cognitive engagement. “They announced it early,” she explained, “because they were afraid there’s going to be consequences now.”

The findings suggested declining engagement among students using generative AI. “They found that the group who used ChatGPT had lower and lower levels of cognitive engagement,” she said.

Cacatelli also warned of what she described as a “slippery slope”: “Once they use it once, the next time they use it more,” she said, “and the next time they outsource their thinking more.”

She cited further survey data showing broader social effects, including that “half of students agree that using AI in class makes them feel less connected to the teacher.” “That relationship between the student and teacher is one of the things that is the intrinsic motivation for students to continue to engage,” she said. “If we start eroding that, that can be dangerous.”

Where teachers see clear benefits

Alongside the risks, Cacatelli highlighted areas where educators report tangible gains. “Sixty-nine percent of teachers said AI tools have improved their teaching methods or skills,” she said. “Fifty-nine percent said AI has enabled more personalised learning. And fifty-five percent said AI has given more time to interact directly with students.”

She described the final figure as critical. “To me, that’s the win,” Cacatelli said. “Winning looks like teachers having more time to interact directly with students.”

She added that teachers are using AI to reduce workload and improve lesson design, while students benefit when AI supports early-stage writing. “It helps with the logistics of writing,” she said. “Organisation, coherence, syntax, grammar.”

Turnitin focuses on how work is created, not just what is submitted

Cacatelli argued that academic integrity now depends on understanding learning over time, not simply evaluating final outputs.

“It’s understanding the student’s process, not just the final product,” she said. “The final product is a useful artefact… but what is the process of learning?”

She acknowledged that determining acceptable AI use within that process remains difficult. “We don’t yet know where in that process it’s okay to offload learning,” she said. “And at what stage that is okay.”

She then outlined Turnitin’s direction, including its Clarity writing environment, which is designed to make writing development visible to instructors. “This is not a sales page,” she told the audience. “I just want to show you what we believe the future of writing looks like.”

She emphasized that teachers often lack insight into how assignments are produced. “The teacher doesn’t see how they’re using it,” Cacatelli said. “And we’re not closing that gap to understand.”

Policy still lagging behind practice

Cacatelli closed by warning that institutional policy is trailing student behavior. “A critical next step is determining each institution’s approach to AI use,” she said.

She acknowledged that blanket rules are unlikely to work. “Policies are going to need to be nuanced,” she said. “But you need guidance, tools, and guardrails.”

She also noted the mixed signals students receive as employers increasingly expect AI fluency. “Students are getting mixed messages,” she observed.

Her advice to institutions was clear. “The number one thing is have these conversations,” Cacatelli said. “Don’t ignore that it’s happening around you.” She ended by reinforcing the need for openness as AI continues to evolve. “We need to work as a community,” Cacatelli concluded, “to have transparent conversations, and work transparently with your students.”
