Anthropic analyzes how university educators use Claude across academic tasks

Photo credit: Anthropic

Anthropic, the AI company behind Claude, has published new education research on how university faculty use its tools.

The report analyzes about 74,000 anonymized Claude.ai conversations from higher education accounts in May and June 2025 and includes interviews and surveys with 22 Northeastern University faculty members. The findings outline where educators lean on AI in and beyond the classroom and where they prefer to stay hands-on.

Data sample and scope

Using a privacy-preserving approach, Anthropic filtered conversations linked to higher education email domains and then identified educator-specific tasks such as creating syllabi, grading, or developing course materials. The analysis is a snapshot of profession-specific activity rather than a full picture of all educator usage.

It focuses on postsecondary roles, not K-12, and captures an early-adopter group over an 11-day window. Institutions weighing policy or training will want to note these bounds and the potential for selection bias.

Curriculum development emerges as the top use case, representing 57 percent of the conversations analyzed, followed by academic research at 13 percent and assessing student performance at 7 percent. Faculty also draft recommendation letters, prepare meeting agendas, develop vocational materials, and create administrative documents.

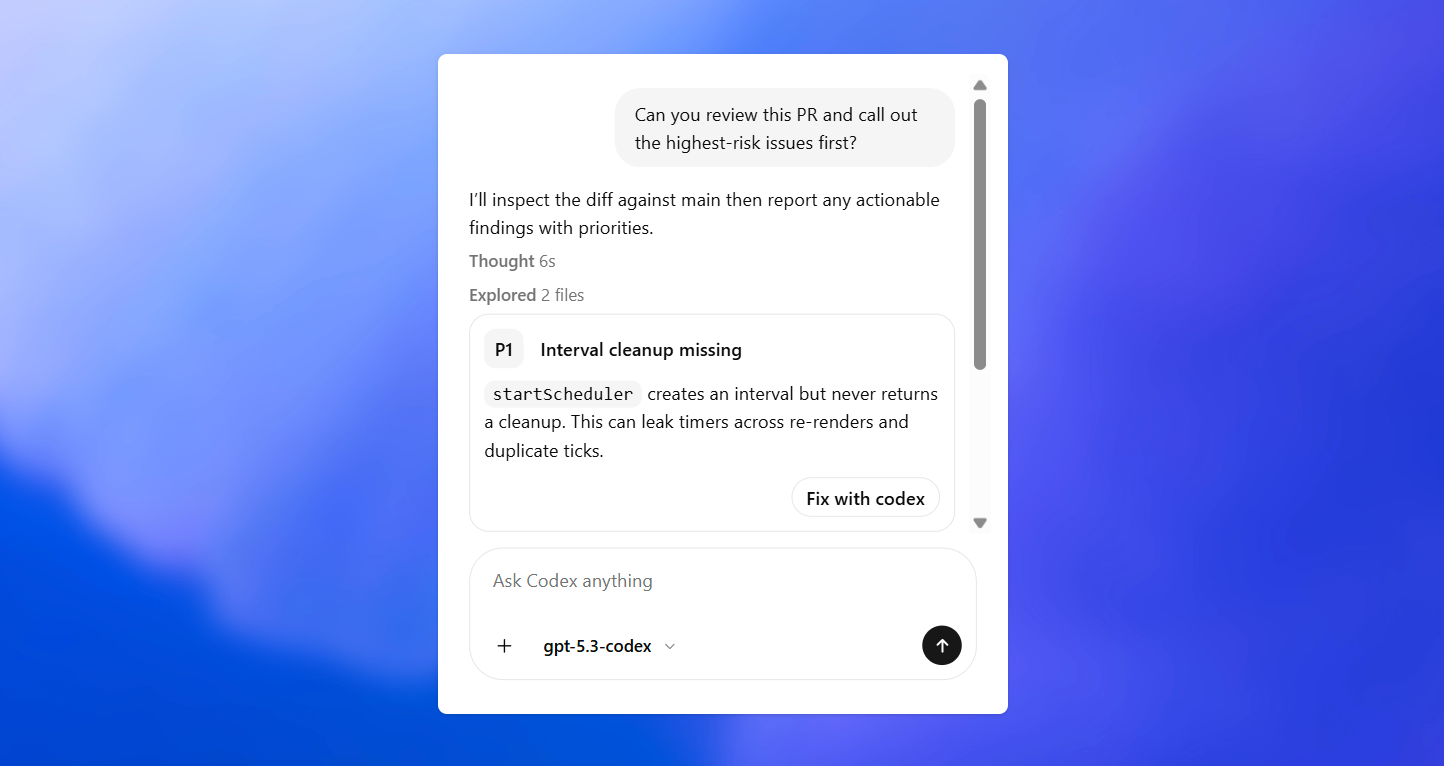

Educators are not only chatting with models. Many build custom materials with Claude Artifacts, including interactive games, automated rubrics, data visualizations, and subject-specific tools. One surveyed Northeastern faculty member says: “What was prohibitively expensive (time) to do [before] now becomes possible. Custom simulation, illustration, interactive experiment. Wow. Much more engaging for students.”

Balancing augmentation and automation

Across tasks, faculty tend to use AI as augmentation for work that needs judgment or context. Teaching and classroom instruction show 77.4 percent augmentation, grant proposals 70.0 percent, academic advising 67.5 percent, and supervising student work 66.9 percent.

Routine tasks see more automation. Managing finances and fundraising shows 65.0 percent automation, maintaining student records and evaluating performance 48.9 percent, and admissions and enrollment 44.7 percent. In grading-related conversations, 48.9 percent are automation-heavy despite faculty rating grading as AI’s least effective application.

Changing approaches to teaching and assessment

Faculty describe course design and expectations changing alongside AI. One professor says: “AI is forcing me to totally change how I teach. I am expending a lot of effort trying to figure out how to deal with the cognitive offloading issue.” Another notes changes in analytics courses: “AI-based coding has completely revolutionized the analytics teaching/learning experience. Instead of debugging commas and semicolons, we can spend our time talking about the concepts around the application of analytics in business.”

Accuracy checking is an ongoing challenge. As one professor writes, “The challenge is [that] with the amount of AI generation increasing, it becomes increasingly overwhelming for humans to validate and stay on top.”

Views on grading remain split. A Northeastern professor says: “Ethically and practically, I am very wary of using [AI tools] to assess or advise students in any way. Part of that is the accuracy issue. I have tried some experiments where I had an LLM grade papers, and they're simply not good enough for me. And ethically, students are not paying tuition for the LLM’s time, they're paying for my time. It's my moral obligation to do a good job (with the assistance, perhaps, of LLMs).”

Others rethink assignments so AI cannot complete them end-to-end. One professor explains: “I will redesign the assignment so it can't be done with AI next time. I had one student complain that the weekly homework was hard to do and they were annoyed because Claude and ChatGPT was useless in completing the work. I told them that was a compliment, and I will endeavor to hear that more from students.”

A surveyed professor sums up a common stance on how to use these tools: “It's the conversation with the LLM that's valuable, not the first response. This is also what I try to teach students. Use it as a thought partner, not a thought substitute.”
