No More Marking reports new findings from national AI assessment projects

No More Marking has released new data on its AI-supported Comparative Judgement model, including results from writing and history assessments completed this term.

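No More Marking, which provides large-scale assessment services for UK schools, says it has expanded use of its AI-supported Comparative Judgement system across national and bespoke projects this term. Comparative Judgement is used to score writing and other open-response tasks. Instead of marking each script against a rubric, judges repeatedly compare two scripts and decide which is better, and these decisions are combined into a score for every script.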
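
The source does not say how No More Marking turns pairwise decisions into scores. A common choice for Comparative Judgement is the Bradley-Terry model, in which each script has a latent strength p and the chance that script i is preferred to script j is p_i / (p_i + p_j). The sketch below fits that model with the standard minorisation-maximisation (MM) update and reports log-strengths. The function name `fit_bradley_terry` and the judgement data are hypothetical, and the company's actual model and reporting scale may differ.

```python
import math
from collections import defaultdict


def fit_bradley_terry(judgements, iterations=200):
    """Fit Bradley-Terry strengths from (winner, loser) pairs.

    Uses the MM update  p_i <- wins_i / sum_j n_ij / (p_i + p_j)
    and returns log-strengths, which sum to zero.
    Assumes every script has won and lost at least once and that all
    scripts are linked by a chain of comparisons.
    """
    wins = defaultdict(int)
    pair_counts = defaultdict(int)  # times each unordered pair was judged
    for winner, loser in judgements:
        if winner == loser:
            continue  # a script cannot be compared with itself
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    scripts = sorted({s for pair in pair_counts for s in pair})
    if any(wins[s] == 0 for s in scripts):
        raise ValueError("every script needs at least one win")

    strength = {s: 1.0 for s in scripts}
    for _ in range(iterations):
        updated = {}
        for i in scripts:
            denom = 0.0
            for pair, n in pair_counts.items():
                if i in pair:
                    (j,) = pair - {i}
                    denom += n / (strength[i] + strength[j])
            updated[i] = wins[i] / denom
        # Fix the arbitrary scale: geometric mean of strengths = 1.
        log_mean = sum(math.log(v) for v in updated.values()) / len(updated)
        strength = {s: v / math.exp(log_mean) for s, v in updated.items()}

    return {s: math.log(v) for s, v in strength.items()}


# Hypothetical judgements: each tuple is (preferred script, other script).
judgements = [
    ("A", "B"), ("A", "C"), ("B", "C"), ("C", "A"),
    ("A", "B"), ("B", "A"), ("C", "B"), ("A", "C"),
]
scores = fit_bradley_terry(judgements)
print(scores)  # script A, which won most often, gets the highest score
```

In a real assessment each script would be seen many times by several judges, and the log-strengths would typically be rescaled onto a reporting scale before results are shared with schools.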

The organisation reports that its Comparative Judgement plus AI model, first trialled in March, is now standard across its assessment offer. The model has been used to assess more than 200,000 pieces of student writing, and No More Marking has completed its first nationally standardised history assessment.

Daisy Christodoulou, Director of Education, says: “The Comparative Judgement plus AI model, which we developed earlier this year and trialled in March, is now available as standard for all of our national & bespoke assessments.”

New data shows close alignment between AI and human judging

The company reports that results from this term’s Year 3 writing assessment show close agreement between the human-judged and AI-judged groups. Around half of participating schools opted to use AI judges, which allowed the two approaches to be compared.

According to No More Marking, both groups produced an identical overall mean score of 493. The standard deviation was 39 for the AI-judged group, compared with 46 for the human-judged group. The company notes that the smaller spread means the AI-judged group had fewer extreme scores, although the reason for this is unclear.

The organisation presents these findings as further evidence that human and AI judgements align closely in writing assessments.

Comparative Judgement shows higher correlations than absolute scoring

No More Marking says the latest data reinforces its position that AI performs better at Comparative Judgement than at absolute judgement. The company previously tested an absolute-judgement approach and found a correlation of 0.5 between scores on consecutive assessments, compared with 0.7 or above when using human Comparative Judgement.

Approximately 23,000 students who completed this term’s Year 3 assessment also took part in a similar Year 2 assessment in February, which was judged entirely by humans. No More Marking reports that October scores from both the AI-judged and the human-judged groups correlated strongly with the same students’ February results.

Christodoulou writes in the update that this provides “another data point showing that the AI is as good as humans.”
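
A correlation between two assessments only makes sense when scores are matched student by student, so only pupils who sat both assessments contribute. The sketch below computes Pearson's r over the pupils two score tables have in common. The function names, pupil IDs and scores are hypothetical, and the source does not say which correlation statistic No More Marking reports, so Pearson's r is an assumption.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists of at least two scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_yy = sum((y - mean_y) ** 2 for y in ys)
    return s_xy / math.sqrt(s_xx * s_yy)


def matched_correlation(earlier, later):
    """Correlate two {pupil_id: score} tables over pupils present in both."""
    common = sorted(earlier.keys() & later.keys())
    return pearson([earlier[p] for p in common], [later[p] for p in common])


# Hypothetical scaled scores; p6 sat only the first assessment and is ignored.
february = {"p1": 450, "p2": 480, "p3": 500, "p4": 520, "p5": 540, "p6": 470}
october = {"p1": 470, "p2": 475, "p3": 515, "p4": 510, "p5": 560}
print(round(matched_correlation(february, october), 2))
```

Running the same calculation once for the AI-judged pupils and once for the human-judged pupils gives two correlations that can be compared directly.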

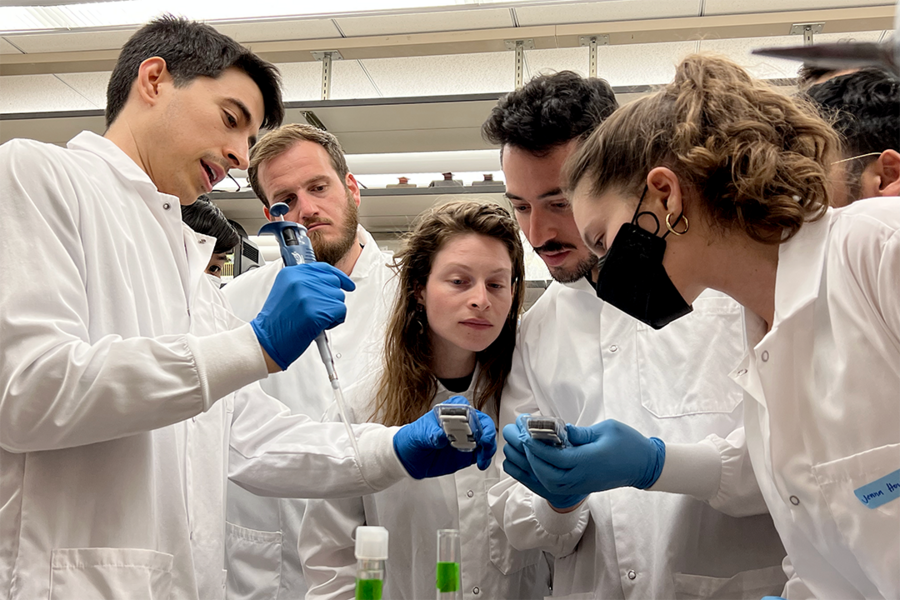

First national history assessment completed

The organisation also reports the completion of its first nationally standardised history assessment, in which 25 schools and just over 4,000 students took part. AI judges were used to reduce the time burden on teachers, and the average judging time was just under 20 minutes per teacher.

The company states that the AI agreed with human decisions 77 percent of the time. Although this is lower than the 85 percent agreement typically seen in writing assessments, No More Marking says early feedback from schools indicates that the results were coherent and in line with expectations.
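
An agreement figure such as 77 percent can be read as the share of pairs on which the AI chose the same script as a human judge. The sketch below computes that share from two hypothetical tables keyed by pair ID. How No More Marking actually matches AI and human decisions, and which pairs it counts, is not stated in the source, so this is an assumption.

```python
def agreement_rate(human, ai):
    """Fraction of pairs, judged by both, where the AI chose the human's winner.

    Each argument maps a pair ID to the ID of the script judged better.
    """
    shared = human.keys() & ai.keys()
    if not shared:
        raise ValueError("no pairs were judged by both a human and the AI")
    agreed = sum(1 for pair in shared if human[pair] == ai[pair])
    return agreed / len(shared)


# Hypothetical decisions on four pairs of history essays.
human = {"pair1": "s1", "pair2": "s4", "pair3": "s5", "pair4": "s8"}
ai = {"pair1": "s1", "pair2": "s3", "pair3": "s5", "pair4": "s8"}
print(f"{agreement_rate(human, ai):.0%}")  # 75%
```

Raw agreement is not corrected for chance: because each decision is a choice between two scripts, a judge guessing at random would still agree about half the time.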

The organisation also notes growing use of its platform for custom AI assessments in subjects beyond English and history. These projects are not nationally standardised but use the same AI-supported Comparative Judgement approach. Christodoulou concludes: “We have just completed our first national history assessment.”