OpenAI says GPT-5.2 makes math and science work more reliable for researchers

OpenAI points to stronger benchmark results and a new case study to argue that GPT-5.2 improves consistency and reliability in scientific and mathematical research workflows.

OpenAI is positioning GPT-5.2 as a step forward for scientific and mathematical workflows, pointing to stronger performance on graduate-level science questions and expert-level math evaluations, as well as a case study where the model helped resolve an open problem in statistical learning theory.

The company says the improvements matter most where precision and multi-step reasoning are required, such as coding, data analysis, modeling, and experimental design. OpenAI frames the update as part of a broader effort to support research exploration while keeping human verification central.

Stronger benchmark performance where precision matters

OpenAI highlights results on GPQA Diamond, a benchmark of graduate-level multiple-choice questions in physics, chemistry, and biology. GPT-5.2 Pro achieved 93.2 percent accuracy, followed by GPT-5.2 Thinking at 92.4 percent. Both results were obtained with reasoning effort set to maximum and no external tools enabled.

On FrontierMath (Tier 1–3), an evaluation designed to test expert-level mathematics, GPT-5.2 Thinking reached 40.3 percent accuracy. In this evaluation, a Python tool was enabled and reasoning effort was set to maximum. OpenAI says the results reflect stronger general reasoning and abstraction rather than narrow task-specific gains.

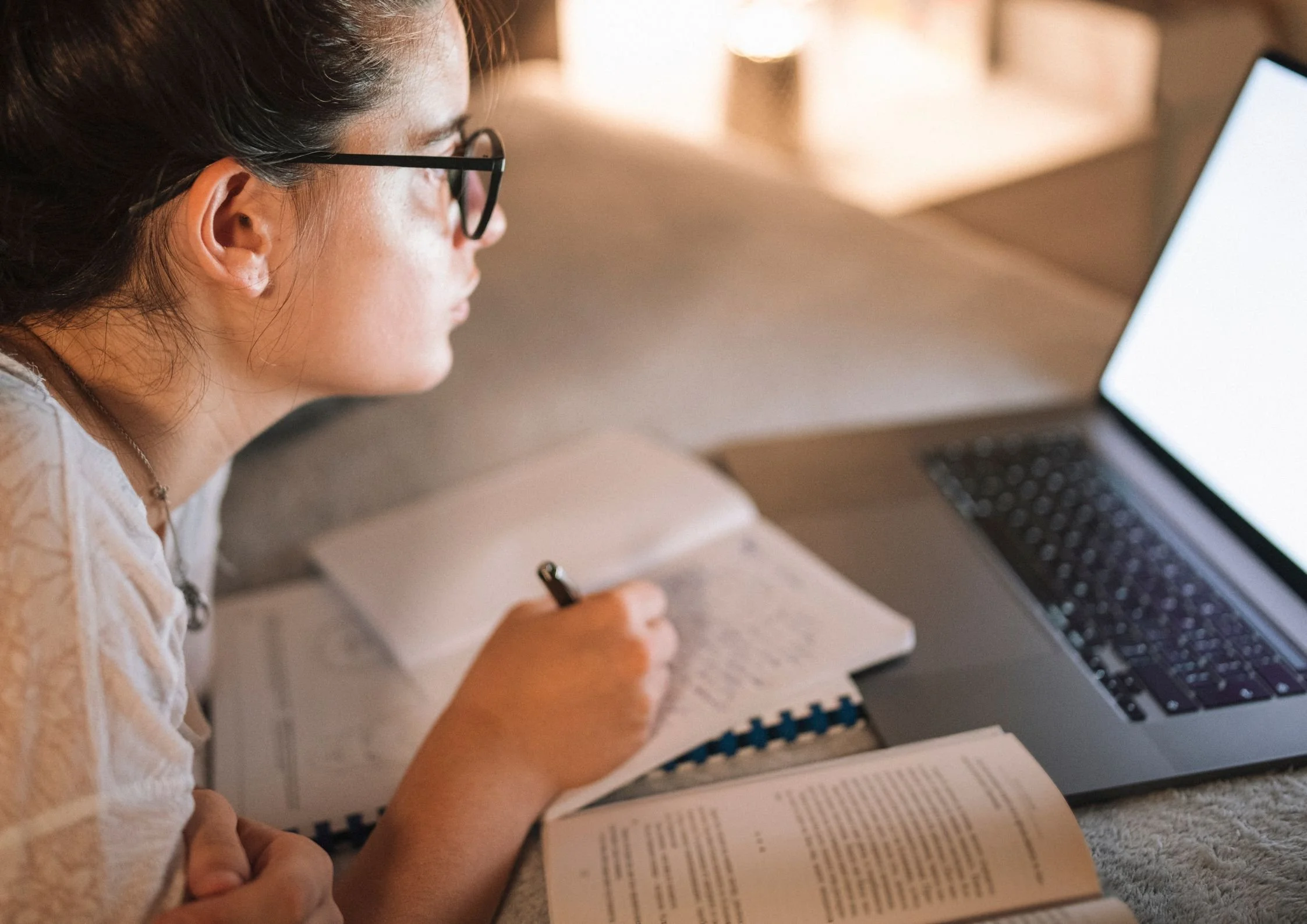

A case study on an open problem in learning theory

OpenAI’s announcement centers on a new paper, On Learning-Curve Monotonicity for Maximum Likelihood Estimators, which documents how GPT-5.2 Pro was used to address an open research question in statistical learning theory.

The problem asks whether model performance reliably improves as more data is added, a question that affects how learning curves are interpreted in real-world modeling. According to OpenAI, researchers asked GPT-5.2 Pro to solve the problem directly, without providing a proof outline or intermediate steps. Human researchers then focused on verification, review, and validation, including external expert checks.

OpenAI says follow-up exploration extended the result to higher-dimensional settings and other common statistical models, with the human role remaining focused on evaluation and clarity rather than mathematical scaffolding.
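
To make the learning-curve question concrete, the short Python sketch below estimates a learning curve by simulation and checks whether it is monotone. It is an illustration only and is not taken from the paper: the Gaussian mean model, the squared-error metric, and the function names (`empirical_learning_curve`, `is_monotone_nonincreasing`) are assumptions chosen for simplicity.

```python
import random
import statistics


def mle_mean(sample):
    """Maximum likelihood estimate of a Gaussian mean: the sample average."""
    return sum(sample) / len(sample)


def empirical_learning_curve(sizes, true_mean=0.0, sigma=1.0, trials=2000, seed=0):
    """Average squared error of the MLE at each sample size.

    Illustrative setup (an assumption, not the paper's): data are drawn
    from a Gaussian with known standard deviation, and performance is
    measured as squared error against the true mean.
    """
    rng = random.Random(seed)
    curve = []
    for n in sizes:
        errors = []
        for _ in range(trials):
            sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
            errors.append((mle_mean(sample) - true_mean) ** 2)
        curve.append(statistics.fmean(errors))
    return curve


def is_monotone_nonincreasing(curve, tol=0.0):
    """True if the error never rises by more than tol as data is added."""
    return all(later <= earlier + tol for earlier, later in zip(curve, curve[1:]))


if __name__ == "__main__":
    sizes = [1, 4, 16, 64]
    curve = empirical_learning_curve(sizes)
    for n, err in zip(sizes, curve):
        print(f"n={n:3d}  mean squared error={err:.4f}")
    print("monotone:", is_monotone_nonincreasing(curve))
```

In this simple setting the expected squared error is sigma squared divided by n, so the curve falls steadily as data is added. The open question is whether such monotone behavior holds more generally, which a simulation like this can illustrate but cannot prove.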

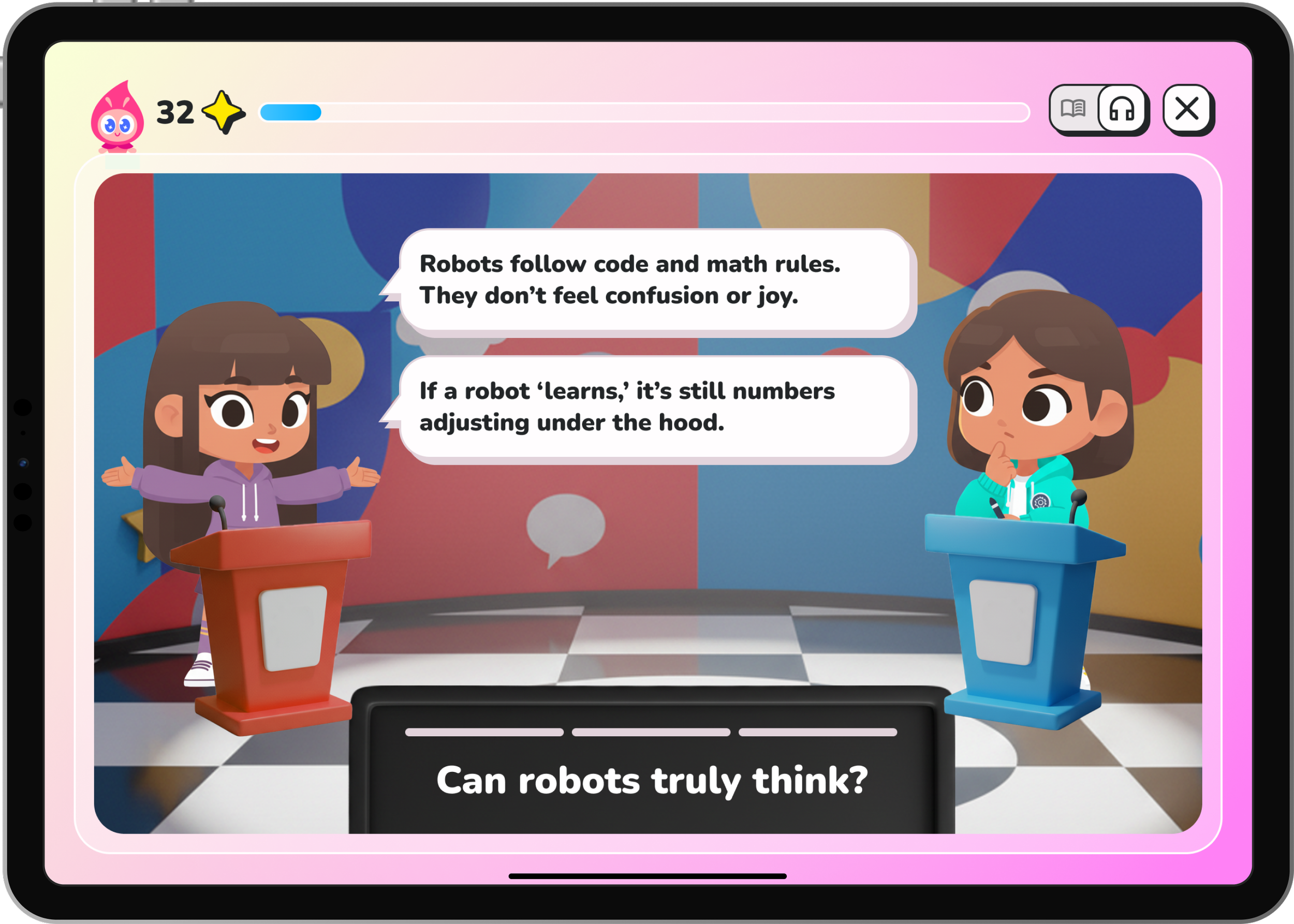

Implications for research and EdTech

OpenAI positions the case study as an example of how AI systems can support research in domains with strong theoretical foundations, such as mathematics and theoretical computer science. The company stresses that these models are not independent researchers and still require expert judgment, transparency, and validation.

For EdTech, the same reasoning improvements may translate into more reliable math tutoring, science explanations, coding support, and analytical tools, particularly where consistency across longer chains of reasoning is critical. OpenAI emphasizes that careful workflows, rather than automation alone, are key to responsible use in both research and education.