Harvard, Berkeley and others push for science-led AI policy in new multi-institution study

New publication outlines proposals for using scientific evidence to guide AI regulation, building on state-level work in California.

Researchers from multiple academic and policy institutions have co-authored a new article in Science advocating evidence-based approaches to artificial intelligence regulation. The institutions include the University of California, Berkeley; Stanford University; Harvard University; Princeton University; Brown University; the Institute for Advanced Study; and the Carnegie Endowment for International Peace.

The article, “Advancing science- and evidence-based AI policy,” was published on July 31 and includes contributions from Jennifer Chayes, Ion Stoica, Dawn Song, and Emma Pierson at UC Berkeley.

It proposes that AI policy be guided by scientific analysis, with clear mechanisms in place to generate and apply credible, actionable evidence.

Framework for evidence-driven regulation

The authors outline three components of an evidence-based policy model: how evidence should shape policy decisions, the current state of evidence across AI domains, and how regulatory efforts can accelerate new evidence production.

They note that defining what constitutes credible evidence is a foundational challenge: “Defining what counts as (credible) evidence is the first hurdle for applying evidence-based policy to an AI context – a task made more critical since norms for evidence vary across policy domains.”

The article warns against using evolving evidence as a reason for inaction or regulatory delay, highlighting previous cases where industries misused scientific claims to avoid oversight.

Recommendations and earlier California report

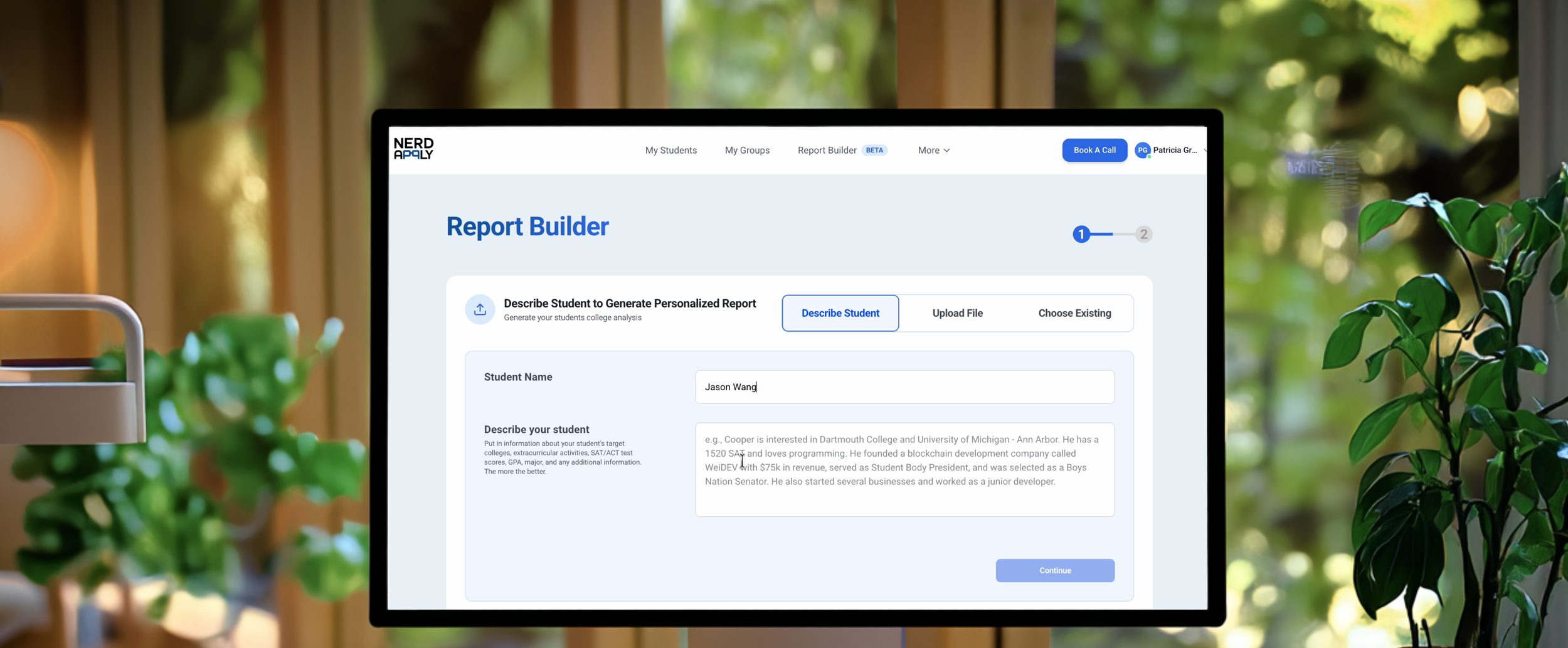

Policy recommendations in the article include requiring more safety disclosures from AI companies, incentivizing model evaluations before release, monitoring for post-deployment harms, protecting third-party researchers, and strengthening social safeguards.

“Evidence-based AI policy would benefit from evidence that is not only credible but also actionable,” the authors write. “A focus on marginal risk, meaning the additional risks posed by AI compared to existing technologies like internet search engines, will help identify new risks and how to appropriately intervene to address them.”

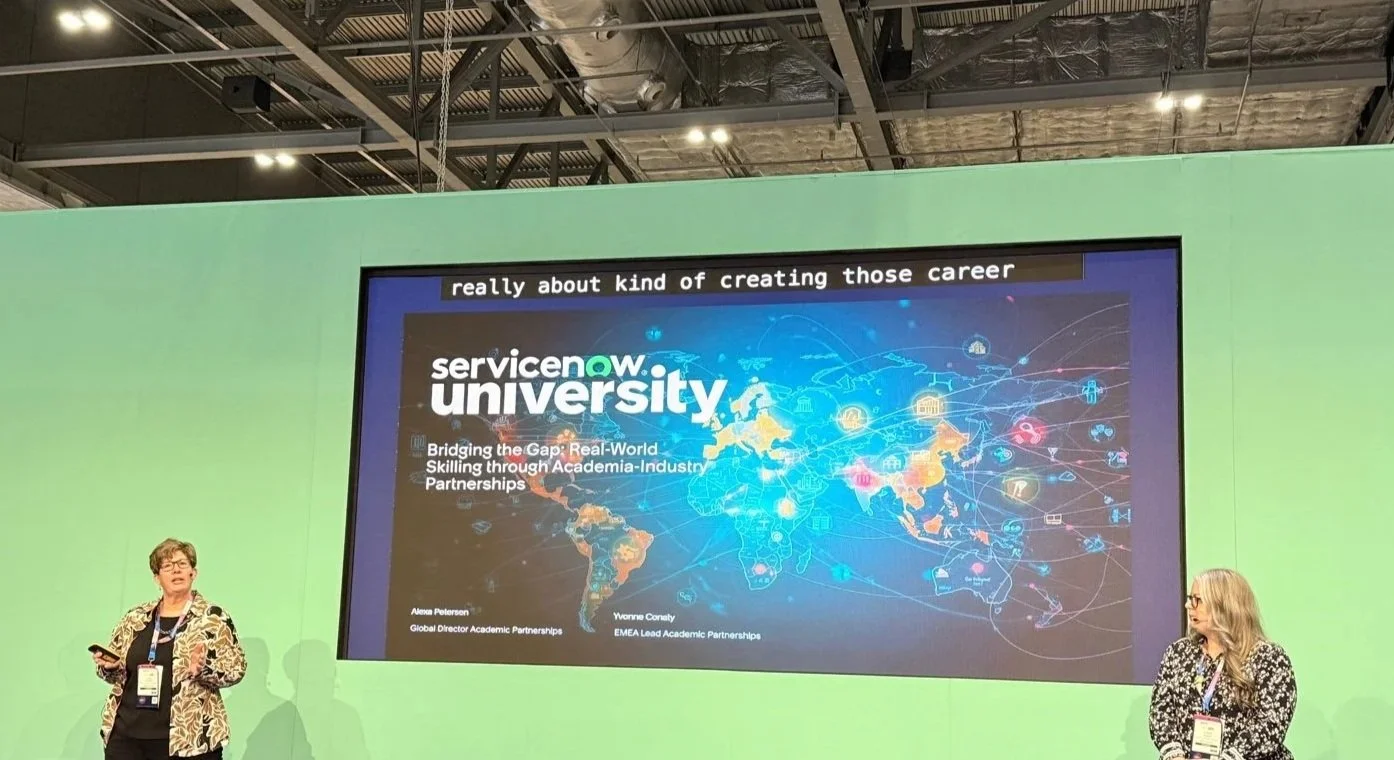

The article builds on recent work by the Joint California Policy Working Group on AI Frontier Models, which was co-led by Chayes. That group’s final report, “The California Report on Frontier AI Policy,” was submitted last month to Governor Gavin Newsom and has been referenced by California legislators and state agencies involved in AI policymaking.

The authors conclude, “We recognize that coalescing around an evidence-based approach is only the first step in reconciling many core tensions. Extensive debate is both healthy and necessary for democratically legitimate policymaking; such debate should be grounded in available evidence.”