GPT-5.2 targets everyday professional work, long-running agents, and science workloads

OpenAI, the AI research and deployment company that develops frontier models and tools including ChatGPT and its enterprise platform, says GPT-5.2 is designed to handle complex, multi-step tasks that span spreadsheets, presentations, code, images, and long documents, while also improving reasoning and tool use for agentic workloads.

OpenAI executives take to LinkedIn as GPT-5.2 rolls out

OpenAI formally introduced GPT-5.2 in a detailed product blog, while senior executives took to LinkedIn to underline where they see the model fitting into real-world workflows.

Fidji Simo, CEO of Applications at OpenAI, frames the release in terms of everyday work across sectors. Simo says, “GPT-5.2 is here and it’s the best model out there for everyday professional work.”

That positioning reflects the focus of the blog, which highlights general intelligence improvements, stronger performance on structured tasks, and better support for agents that run over longer timeframes. OpenAI says GPT-5.2 is now rolling out in three main variants inside ChatGPT, branded as GPT-5.2 Instant, GPT-5.2 Thinking, and GPT-5.2 Pro, with availability starting on paid plans and expanding from there.

On LinkedIn, Kevin Weil, VP of OpenAI for Science, emphasizes the scientific side of the release. Weil says, “Today we’re launching GPT 5.2, OpenAI’s most powerful model ever. It’s the most advanced model for coding, professional work, long-running agents.”

Weil also points to results on specialist benchmarks and early work with researchers. He says GPT-5.2 achieved “92.4% on GPQA Diamond (PhD-level questions across a range of sciences),” “40.3% on Frontier Math (up from GPT 5.1's 31% just a month ago!),” and “70.9% on GDPval, assessing professional work across 44 occupations.”

GPT-5.2 is pitched as a work engine, not just a chat upgrade

OpenAI’s blog repeatedly ties GPT-5.2 to “professional knowledge work” rather than general use. The company says GPT-5.2 is “the most capable model series yet for professional knowledge work” and reports that “the average ChatGPT Enterprise user says AI saves them 40–60 minutes a day, and heavy users say it saves them more than 10 hours a week.”

GPT-5.2 Thinking is positioned as the main tool for complex workflows. OpenAI says it “is the best model yet for real-world, professional use” and cites results on GDPval, “an eval measuring well-specified knowledge work tasks across 44 occupations.”

According to the company, “GPT-5.2 Thinking beats or ties top industry professionals on 70.9% of comparisons on GDPval knowledge work tasks, according to expert human judges.” Tasks include creating “sales presentations, accounting spreadsheets, urgent care schedules, manufacturing diagrams, or short videos.”

OpenAI also links the new series to specific productivity gains in spreadsheet modeling. On an internal benchmark of junior investment banking analyst tasks, the company reports that GPT-5.2 Thinking’s average score “is 9.3% higher than GPT-5.1’s, rising from 59.1% to 68.4%.” Given those two scores, the 9.3 figure is a gain in percentage points rather than a relative increase.
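The two scores in that quote can be compared in absolute or relative terms, and the results differ. The following is a minimal sketch of the arithmetic using only the 59.1% and 68.4% figures from the quote; the function name `score_gain` is illustrative and not from OpenAI.

```python
def score_gain(old_pct: float, new_pct: float) -> tuple[float, float]:
    """Return (gain in percentage points, relative gain in percent)."""
    point_gain = new_pct - old_pct
    relative_gain = point_gain / old_pct * 100
    return point_gain, relative_gain


# Scores quoted for GPT-5.1 and GPT-5.2 Thinking on the internal benchmark.
points, relative = score_gain(59.1, 68.4)
print(f"{points:.1f} percentage points, {relative:.1f}% relative")
```

Under this reading, the absolute gain matches the 9.3 in the quote, while the relative improvement over GPT-5.1's score works out to roughly 15.7 percent.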

Coding, agents, and long context get measurable upgrades

On the engineering side, OpenAI says GPT-5.2 Thinking “sets a new state of the art of 55.6% on SWE-Bench Pro, a rigorous evaluation of real-world software engineering.” The benchmark covers four languages and is designed to be “more contamination-resistant, challenging, diverse, and industrially relevant” than earlier tests.

For everyday use, OpenAI claims this translates into a model that can “more reliably debug production code, implement feature requests, refactor large codebases, and ship fixes end-to-end with less manual intervention.”

Early testing feedback cited in the blog suggests stronger performance for front-end tasks and “complex or unconventional UI work” including 3D elements. That aligns with OpenAI’s move to show examples where GPT-5.2 produces single-file web apps with interactive elements from a single prompt.

The company also stresses tool-calling improvements that matter for agentic use cases. GPT-5.2 Thinking “achieves a new state of the art of 98.7% on Tau2-bench Telecom,” a benchmark for multi-turn customer support tasks that require reliable tool use. For more latency-sensitive work, OpenAI notes that GPT-5.2 Thinking “performs much better at reasoning.effort='none', substantially outperforming GPT-5.1 and GPT-4.1.”
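For developers wondering what the `reasoning.effort='none'` setting might look like in practice, here is a hedged sketch that only assembles a request body and sends nothing. The nested `reasoning` object is inferred from the dotted name in the quote, the `input` field name and the `build_request` helper are assumptions, and `gpt-5.2` is the API name the article gives for GPT-5.2 Thinking. Check OpenAI's API reference before relying on any of this.

```python
import json


def build_request(prompt: str, effort: str = "none") -> dict:
    """Assemble a request body with a nested reasoning.effort field.

    The layout is an assumption inferred from the dotted name
    `reasoning.effort` in the quote, not a confirmed schema.
    """
    return {
        "model": "gpt-5.2",  # API name the article gives for GPT-5.2 Thinking
        "input": prompt,  # assumed field name for the user prompt
        "reasoning": {"effort": effort},  # 'none' targets latency-sensitive work
    }


# Hypothetical customer-support prompt, for illustration only.
body = build_request("Summarize the open tickets for this customer.")
print(json.dumps(body, indent=2))
```

Keeping the effort level as a parameter would let a team compare latency and quality across settings without changing the rest of the request.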

For long-context scenarios, GPT-5.2 Thinking “sets a new state of the art in long-context reasoning” on OpenAI’s MRCRv2 evaluation and is described as “the first model we’ve seen that achieves near 100% accuracy on the 4-needle MRCR variant (out to 256k tokens).” The company argues this enables deep work over contracts, research papers, transcripts, and multi-file projects without losing coherence.

Vision is another area where benchmarks move materially. OpenAI says GPT-5.2 Thinking cuts error rates “roughly in half” on chart reasoning and software interface understanding, including “CharXiv Reasoning” for scientific figures and “ScreenSpot-Pro” for GUI screenshots.

Science workloads and reasoning benchmarks move beyond headline claims

Weil’s LinkedIn post describes GPT-5.2 as “the world’s best model for assisting and accelerating scientists.” The blog backs up that claim with detailed results on science and reasoning benchmarks.

On GPQA Diamond, which the company describes as “graduate-level Google-proof Q&A” across physics, chemistry, and biology, GPT-5.2 Pro achieves 93.2 percent accuracy, with GPT-5.2 Thinking at 92.4 percent. No tools are enabled on this test.

On FrontierMath, GPT-5.2 Thinking “set a new state of the art, solving 40.3% of problems,” up from 31 percent for GPT-5.1. OpenAI also outlines work where GPT-5.2 Pro contributed to resolving “an open question in statistical learning theory” in a “narrow, well-specified setting,” with the proposed proof later verified by the authors and reviewed with external experts.

The model family also posts higher scores on ARC-AGI-1 and ARC-AGI-2, two benchmarks focused on abstract reasoning. GPT-5.2 Pro is “the first model to cross the 90% threshold” on ARC-AGI-1, while GPT-5.2 Thinking reaches 52.9 percent on ARC-AGI-2, which is designed to isolate fluid reasoning. GPT-5.2 Pro goes higher still at 54.2 percent.

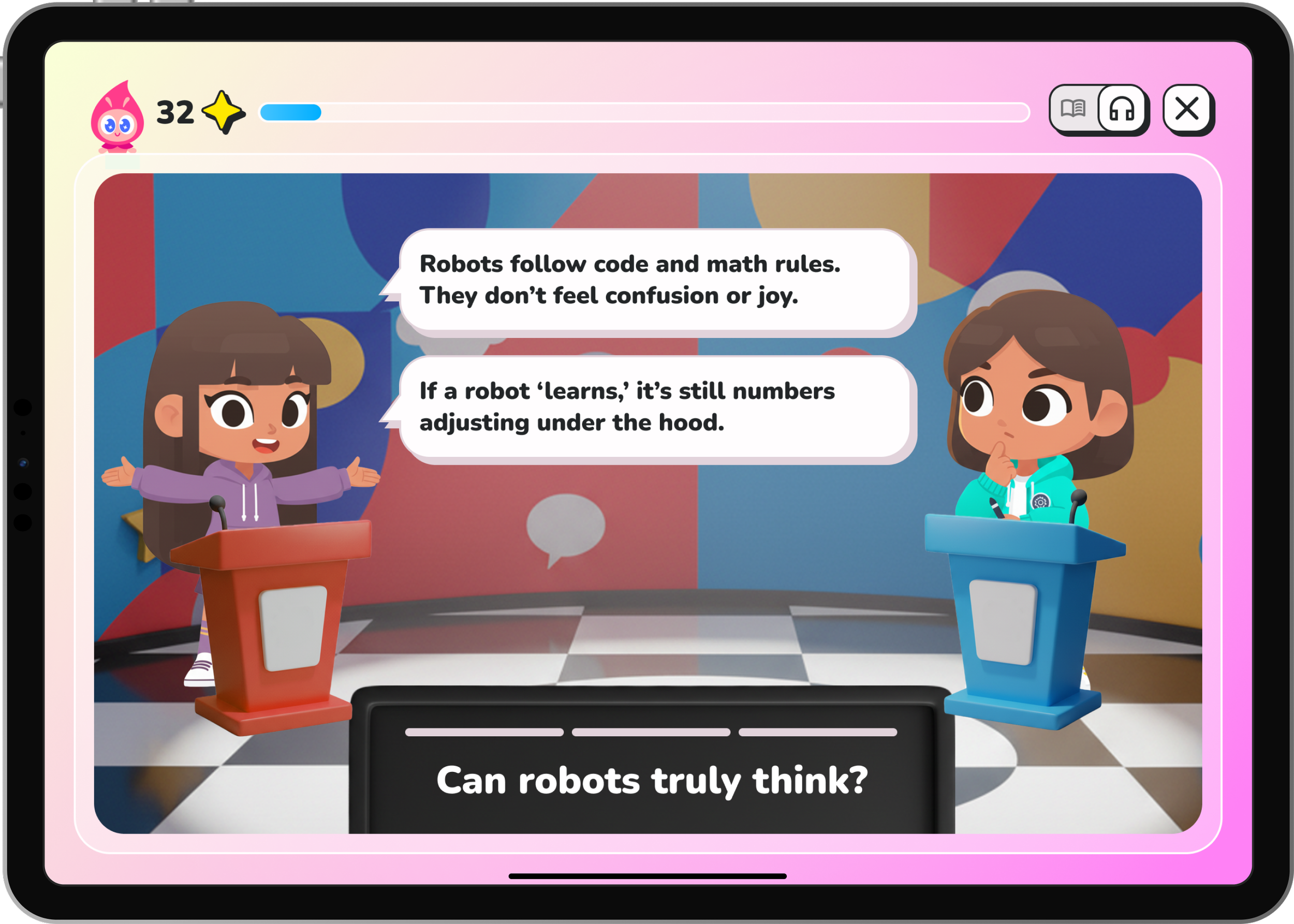

Taken together, these benchmarks move the narrative beyond generic “smarter model” claims and into specific score deltas and test conditions. For schools, universities, and EdTech developers, the question now becomes how far these gains translate into reliable performance in real assessment, tutoring, and research workflows, especially where hallucination risk and oversight requirements remain non-trivial.

Safety, hallucinations, and the ongoing need for oversight

OpenAI says GPT-5.2 Thinking hallucinates less often than GPT-5.1 Thinking on a set of de-identified ChatGPT queries. The company reports that responses with errors were 30 percent less common in relative terms and that “for professionals, this means fewer mistakes when using the model for research, writing, analysis, and decision support.”

At the same time, the blog is explicit that “like all models, GPT-5.2 Thinking is imperfect” and adds a clear reminder: “For anything critical, double check its answers.”

On the safety side, GPT-5.2 builds on “safe completion” work introduced with GPT-5, teaching the model “to give the most helpful answer while still staying within safety boundaries.” OpenAI highlights “meaningful improvements” in how models respond to prompts indicating “signs of suicide or self harm, mental health distress, or emotional reliance on the model.”

The company is also “in the early stages of rolling out our age prediction model” to automatically apply content protections for users under 18 and says GPT-5.2 is “one step in an ongoing series of improvements” rather than a finishing point.

This is an important caveat for education and workplace deployments. The benchmarks are strong, but OpenAI is clear that oversight remains essential, especially in high-stakes domains, and that work on refusals and reliability is still active.

Packaging, pricing, and what this means for developers

In ChatGPT, GPT-5.2 comes in three main variants: GPT-5.2 Instant, GPT-5.2 Thinking, and GPT-5.2 Pro. OpenAI says Instant is “a fast, capable workhorse for everyday work and learning,” Thinking is “designed for deeper work” such as coding, long-document summarization, and planning, and Pro is “our smartest and most trustworthy option for difficult questions where a higher-quality answer is worth the wait.”

Rollout starts with Plus, Pro, Go, Business, and Enterprise plans, with GPT-5.1 kept available for three months under legacy models before being sunset in ChatGPT. On the API side, GPT-5.2 Thinking is exposed as gpt-5.2, GPT-5.2 Instant as gpt-5.2-chat-latest, and GPT-5.2 Pro as gpt-5.2-pro.

Pricing reflects the performance tier. In the API, gpt-5.2 and gpt-5.2-chat-latest are priced at “$1.75/1M input tokens and $14/1M output tokens, with a 90% discount on cached inputs.” GPT-5.2 Pro is higher at “$21” per million input tokens and “$168” per million output tokens.
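To make those rates concrete, the sketch below estimates the cost of a single request from the quoted prices. It is an illustration under stated assumptions: the `Pricing` class and `request_cost` function are hypothetical names, cached tokens are assumed to be counted within the input total, and no cached discount is applied to GPT-5.2 Pro because the article does not quote one. The token counts in the example are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float      # USD per 1M uncached input tokens
    output_per_million: float     # USD per 1M output tokens
    cached_discount: float = 0.0  # fraction taken off cached input tokens


# Prices quoted in the article.
GPT_5_2 = Pricing(1.75, 14.0, cached_discount=0.90)
# No cached discount is quoted for Pro, so none is assumed here.
GPT_5_2_PRO = Pricing(21.0, 168.0)


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int,
                 cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    Assumes cached_tokens is a subset of input_tokens.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    cached_rate = pricing.input_per_million * (1 - pricing.cached_discount)
    total = (uncached * pricing.input_per_million
             + cached_tokens * cached_rate
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


# Hypothetical workload: 200k input tokens (150k cached), 10k output tokens.
standard = request_cost(GPT_5_2, 200_000, 10_000, cached_tokens=150_000)
pro = request_cost(GPT_5_2_PRO, 200_000, 10_000)
print(f"gpt-5.2: ${standard:.4f}  gpt-5.2-pro: ${pro:.4f}")
```

A buyer could plug in observed token counts from a pilot deployment to compare tiers, since output tokens cost eight times as much as input tokens at both price points.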

OpenAI acknowledges that “GPT-5.2 is priced higher per token than GPT-5.1 because it is a more capable model,” but argues that on many agentic evaluations “the cost of attaining a given level of quality ended up less expensive due to GPT-5.2’s greater token efficiency.”

For EdTech platforms and institutional buyers, this trade-off between higher per-token pricing and potential overall efficiency will be a key point of evaluation, particularly for always-on agents, assessment tools, and content pipelines.

OpenAI also notes it has “no current plans to deprecate GPT-5.1, GPT-5, or GPT-4.1 in the API” and commits to providing “ample advance notice” before any deprecations.

GPT-5.2 was developed “in collaboration with our long-standing partners NVIDIA and Microsoft,” with Azure data centers and NVIDIA GPUs including H100, H200, and GB200-NVL72 providing the infrastructure for training and deployment.

Summing up the release and the wider roadmap, OpenAI positions GPT-5.2 as progress rather than a final destination. As the blog puts it, “GPT-5.2 is one step in an ongoing series of improvements, and we’re far from done. While this release delivers meaningful gains in intelligence and productivity, we know there are areas where people want more. In ChatGPT, we’re working on known issues like over-refusals, while continuing to raise the bar on safety and reliability overall. These changes are complex, and we’re focused on getting them right.”
