University of Gloucestershire Senior Lecturer warns that AI could pose a risk to human knowledge

A Senior Lecturer at the University of Gloucestershire (UoG) has warned that AI could pose a risk to the integrity of human knowledge, and says that AI literacy can help prevent manipulation.

Dr Richard Cook, a researcher and senior lecturer in cybersecurity at UoG, says it is becoming increasingly difficult to distinguish fact from fiction, and that this could have “serious implications” for society.

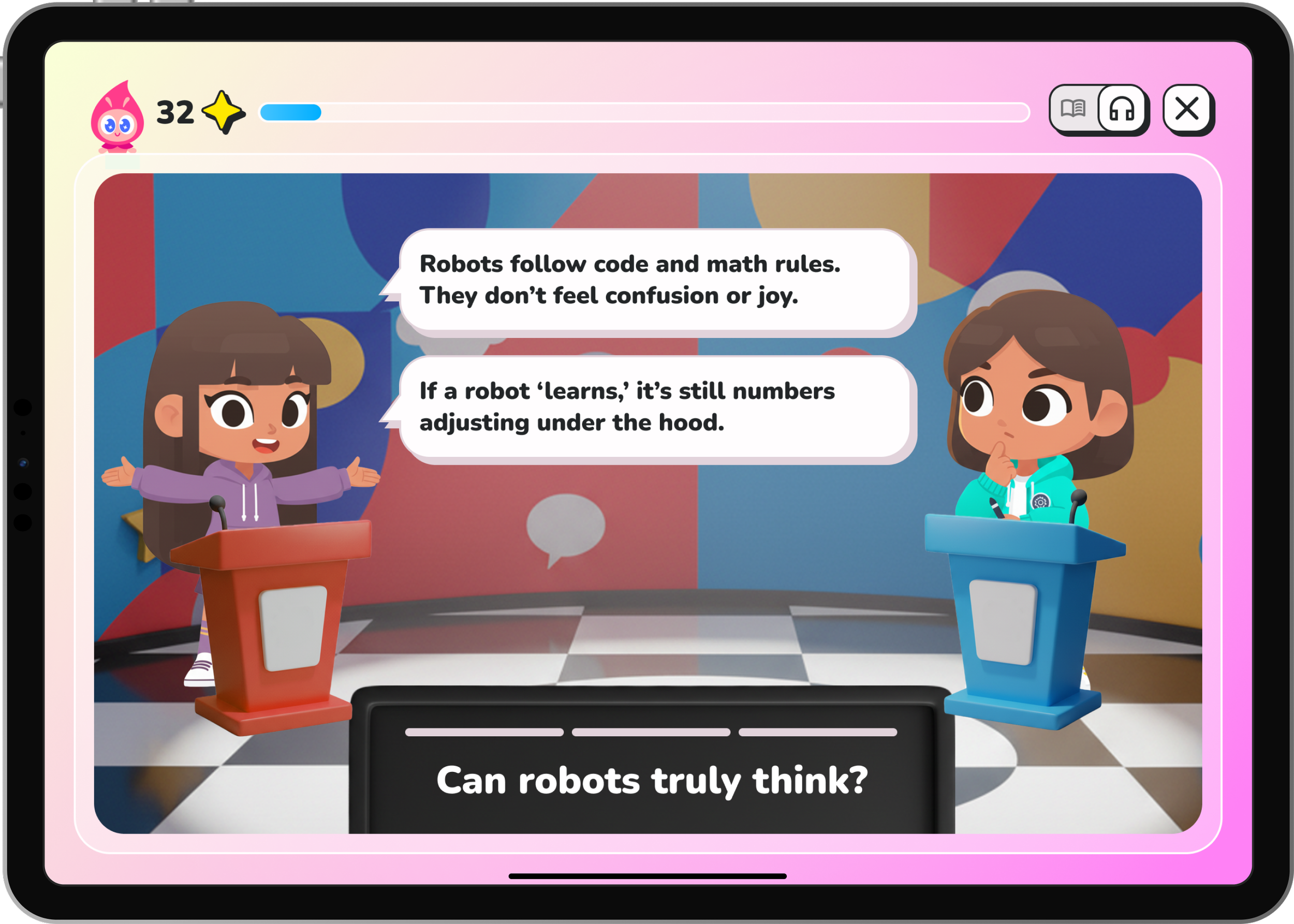

In a new paper titled Minds and Machine: Artificial Intelligence, Algorithms, Ethics and Order in Global Society, Dr Cook says AI systems are generating misinformation, disinformation and malinformation.

“Our information ecosystem is being polluted by ‘nonsense’ – meaning that has been knowingly messed with – which is often mistaken for knowledge,” Dr Cook says.

“Nonsense can be thought of in the same way as plastics in the seas – it is a form of pollution of human knowledge. AI is playing an active role in creating nonsense and it is sometimes intentional.”

Dr Cook adds that our natural tendency to believe what we see or hear is being exploited by AI.

“The more human-like AI appears, the more convincing it becomes, often irrespective of factual accuracy. Alongside this, a natural human tendency for ‘parasocial intimacy’ and ‘social proof’ intersect to create a powerful illusion of credibility in AI that opens us up to manipulation, deception and interference. Over time, this could weaken our shared understanding of reality, as people unknowingly accept untruths and act upon AI-generated nonsense,” he says.

As a result, Dr Cook argues for stronger tools to assess the health of AI systems, comparable to the warning labels found on some food products.

“AI development moves faster than regulation,” Dr Cook adds. “There are currently no enforceable guidelines requiring AI developers to be accountable. Without scrutinising what is happening now and taking individual action, the line between truth and falsehood may soon disappear altogether, with serious implications for society and the canon of knowledge we leave behind in the longer term.”