OpenAI exposes global AI scams and pushes for safer use of ChatGPT

OpenAI has revealed new insights into how artificial intelligence is being used by both scammers and individuals trying to avoid them. The update comes from a LinkedIn post by OpenAI Global Affairs, following comments from Jack Stubbs, a lead member of the company’s Intelligence and Investigations team, during the latest OpenAI Forum titled Scams in the Age of AI.

AI tools help expose global scam operations

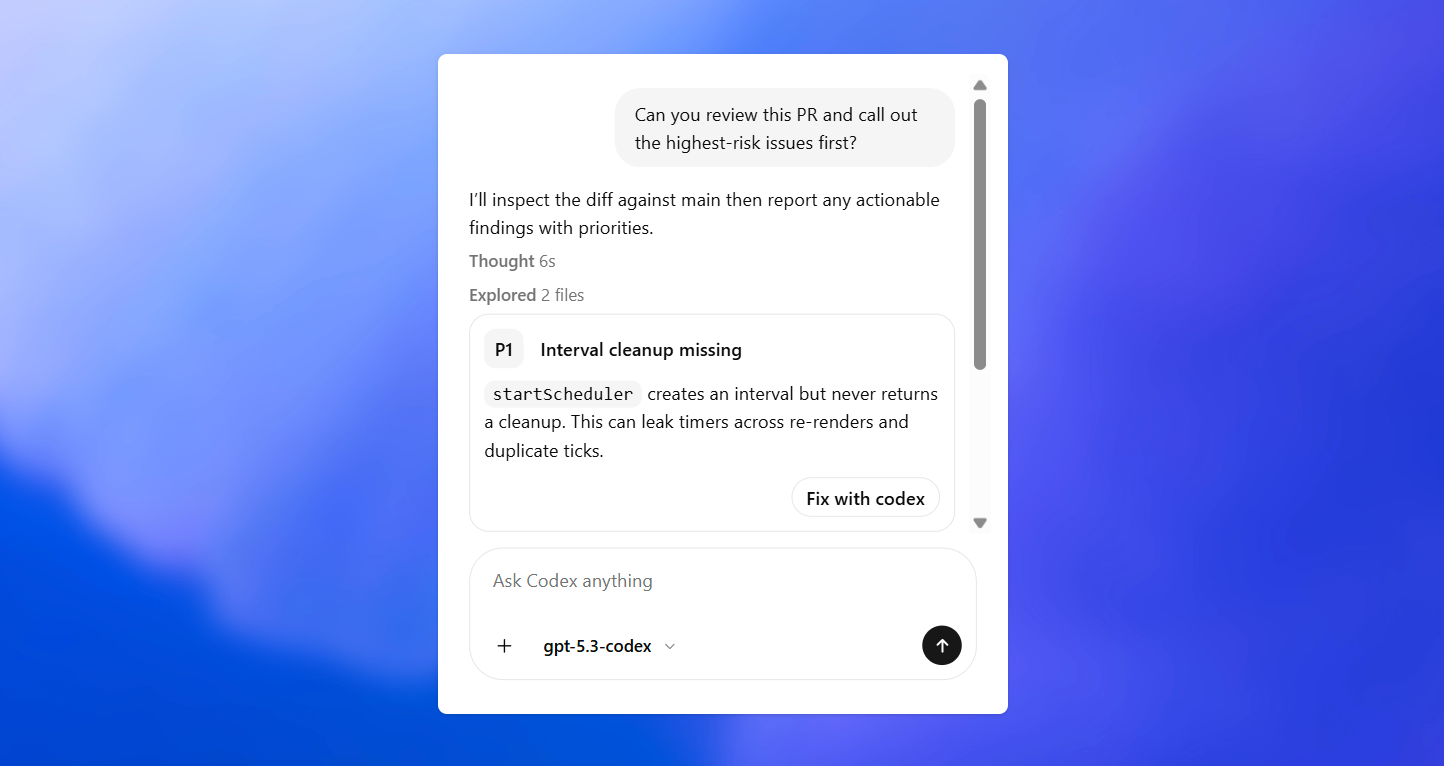

Stubbs described how his team investigates organized criminal networks attempting to exploit AI for scams, while also developing tools to help users recognize fraudulent behavior. He explained how scammers often rely on AI to streamline existing schemes rather than invent new ones.

“The reality is that the vast majority of scam activity we see is more prosaic,” says Stubbs. “It’s more about fitting AI into an existing scam playbook rather than creating new playbooks built around AI.”

Over the past year, OpenAI’s team has helped disrupt operations in Cambodia, Myanmar, and Nigeria, identifying schemes involving fake job postings, fraudulent investment platforms, and AI-assisted scam logistics. Stubbs outlined a typical scam sequence as “ping, zing, and sting,” representing the contact, manipulation, and eventual extraction phases.

Public use of ChatGPT outpaces criminal misuse

While some criminal groups exploit AI tools, Stubbs noted that far more people use ChatGPT for the opposite purpose: to detect and avoid scams. “There are three times more scam-detection interactions with ChatGPT than there are attempts by scammers to misuse it,” he says.

He added that empowering individuals through accessible AI safety tools may have a greater long-term effect than enforcement alone. “AI needs to be part of the solution, not just part of the problem,” says Stubbs. “Using these technologies to provide everyone with an accessible, easy to use, reliable tool that they can have in their pocket and pull out whenever they need it to check whether something is a scam will do far more to prevent harm than any amount of scammers that we can detect and ban from our products.”

OpenAI has recently expanded its work on AI safety education through a new partnership with Older Adults Technology Services (OATS) from AARP, delivered via the OpenAI Academy. The multi-year collaboration will focus on helping older adults across the United States learn to use AI confidently and securely.