Claude shows speed advantage in Anthropic’s Project Fetch robot dog experiment

Anthropic has run a new internal study, Project Fetch, to examine how its Claude model affects human performance on real-world robotics tasks. The one-day experiment compared two teams of staff as they attempted to program a quadruped robot dog to fetch a beach ball, with only one group allowed to use Claude.

The company positioned the work as an uplift study, a method it uses to measure the performance gap between teams with and without AI access. Both groups were assigned a set of increasingly technical tasks involving connectivity, sensor access, manual control, and early-stage autonomy.

Anthropic reports a clear performance difference. Team Claude completed more tasks overall, and for tasks finished by both groups, “Team Claude succeeded in about half the time it took Team Claude-less.” The company adds that Team Claude completed seven of eight assigned tasks, while Team Claude-less completed six.

Connectivity and sensor access create the largest gap

The biggest differences appeared at the hardware interface level. Connecting to the robot and retrieving data from onboard sensors required sifting through conflicting online documentation and multiple connection pathways, some of which did not work as advertised.

Team Claude used the model to evaluate options, select a viable interface, and troubleshoot issues at speed. Team Claude-less prematurely abandoned the simplest method after misinterpreting documentation and spent a significant period unable to progress until organizers intervened with a hint.

Accessing lidar data presented similar issues. Team Claude-less advanced to the next phase using video alone and kept one member focused on the lidar task, who succeeded only near the end of the day.

By the end of the trial, Team Claude had developed a system that could detect the beach ball, navigate toward it, and move it, though the robot still struggled to complete a reliable autonomous retrieval.

AI support improves workflow but introduces distractions

The blog notes that Team Claude wrote “about 9 times more code than Team Claude-less.” Having AI support encouraged team members to test multiple approaches in parallel, but also increased the risk of exploring dead ends. In a non-competitive setting, Anthropic suggests this amount of exploration could be beneficial, though it remains a point to watch in future evaluations.

Manual control and localization were areas where the Claude-less group sometimes moved faster. Once they achieved a stable video feed, Team Claude-less produced a control program and localization method more quickly. However, Anthropic reports the Claude-assisted controller was considerably easier to use, with continuous video rather than intermittent still images.

The company’s analysis identified a clear morale gap between groups. Team Claude-less encountered early setbacks, including an incident where the other team’s robot moved toward their table after a miscalculated command. They entered the lunch break without establishing a working connection to their own robot.

Anthropic used Claude to analyze transcripts from both groups, tracking emotional tone and frequency of questions. The company found that “Team Claude-less’s dialogue was more negative” and that the group expressed confusion twice as often as Team Claude. The Claude-less group also asked more questions of one another, reflecting a heavier reliance on internal collaboration when AI support was not available.

Anthropic notes the small scale and short duration of the experiment. The study involved only two teams over a single day, and all participants were Anthropic employees with day-to-day exposure to Claude. The company says differences might be smaller among people less familiar with the model.

Early signal for future robotics capability

Despite these limits, Anthropic positions Project Fetch as an early indicator of how frontier models may interact with hardware as capabilities expand. It connects the work to its Responsible Scaling Policy, which tracks conditions under which models might begin autonomously contributing to AI R&D or physical-world tasks.

Anthropic writes that “the idea of powerful, intelligent, and autonomous AI systems using some of their intelligence and power to act in the world via robots is not as outlandish as it may sound.”

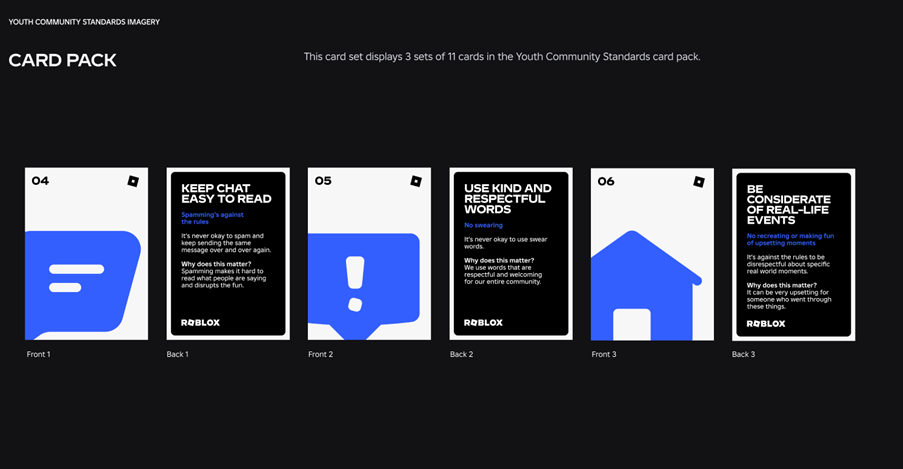
