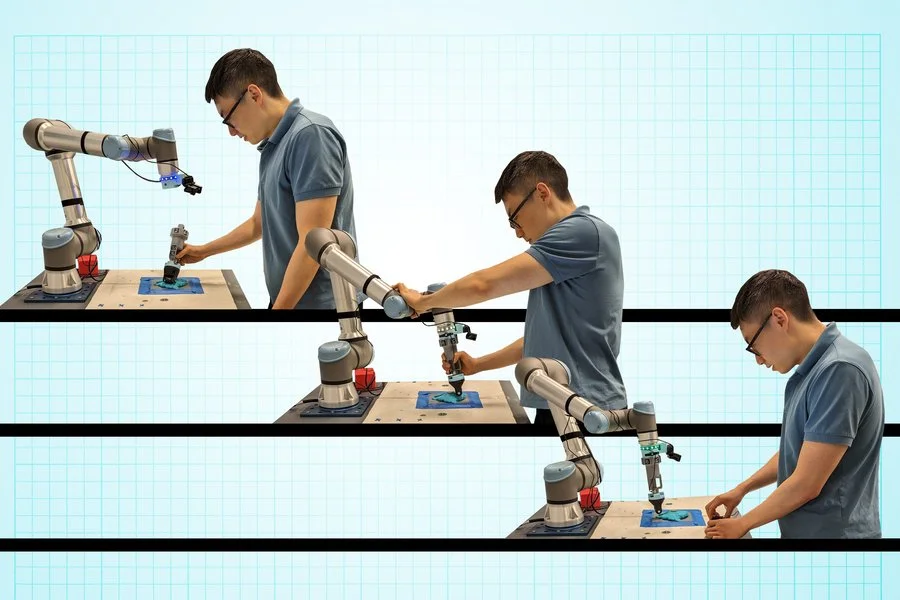

MIT unveils new interface that lets anyone train collaborative robots in three different ways

Handheld tool allows robots to learn tasks through remote control, guided movement, or direct human demonstration, opening flexible training options for factories and future caregiving settings.

Photo credit: MIT researchers

The tool, called a versatile demonstration interface (VDI), can attach to common collaborative robotic arms and allows users to teach tasks by remote control, by physically guiding the robot, or by performing the task themselves while the robot records the motions.

This approach combines three training methods (teleoperation, kinesthetic teaching, and natural demonstration) into one system.

Mike Hagenow, a postdoc in MIT’s Department of Aeronautics and Astronautics, says the goal is to make robots more adaptable to a range of users and environments. “We are trying to create highly intelligent and skilled teammates that can effectively work with humans to get complex work done,” Hagenow says. “We believe flexible demonstration tools can help far beyond the manufacturing floor, in other domains where we hope to see increased robot adoption, such as home or caregiving settings.”

Testing in real-world scenarios

The research team tested the VDI with volunteers from the manufacturing sector, who used it to train a robotic arm on two tasks commonly found on factory floors: press-fitting pegs into holes and molding a dough-like material around a rod. Participants used all three teaching modes.

Results showed that users generally preferred natural demonstration over teleoperation and kinesthetic training. However, participants noted that each method has specific advantages. Teleoperation may be better for hazardous tasks, kinesthetic training can help when adjusting a robot that handles heavy items, and natural teaching is useful for precise, delicate work.

Next steps for robot learning

The interface is equipped with cameras and force sensors that record position, movement, and pressure. The robot can learn the task from these data once the interface is reattached to the arm. The team plans to refine the tool’s design based on feedback and to test further how well it helps robots adapt to a range of tasks.
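For readers curious what a recorded demonstration might look like as data, here is a minimal sketch in Python. It is not the MIT team's software: the names `DemoMode`, `DemoSample`, `velocities`, and `peak_force` are hypothetical, as are the units and the idea of storing one position and one force reading per timestamp. It only illustrates how position, movement, and pressure could be kept together for each of the three teaching modes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class DemoMode(Enum):
    """The three teaching modes named in the article (enum itself is hypothetical)."""
    TELEOPERATION = "teleoperation"
    KINESTHETIC = "kinesthetic"
    NATURAL = "natural"


@dataclass
class DemoSample:
    """One hypothetical sensor reading taken during a demonstration."""
    t: float         # seconds since the demonstration started (assumed unit)
    position: Vec3   # tool position in metres (assumed unit and frame)
    force: Vec3      # measured force in newtons (assumed unit)
    mode: DemoMode   # which teaching mode produced this sample


def velocities(samples: List[DemoSample]) -> List[Vec3]:
    """Estimate movement between consecutive samples by finite differences."""
    result = []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        result.append(tuple((c - p) / dt for c, p in zip(cur.position, prev.position)))
    return result


def peak_force(samples: List[DemoSample]) -> float:
    """Return the largest force magnitude seen in the demonstration."""
    return max(sum(f * f for f in s.force) ** 0.5 for s in samples)
```

A list of such samples, one per sensor reading, is the kind of record a learning algorithm could later consume. How the actual interface stores and processes its data is not described in the article.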

Hagenow says the study highlights the need for flexible robot training in workplaces where robots may support multiple activities. “We imagine using our demonstration interface in flexible manufacturing environments where one robot might assist across a range of tasks that benefit from specific types of demonstrations,” Hagenow says. “We view this study as demonstrating how greater flexibility in collaborative robots can be achieved through interfaces that expand the ways that end-users interact with robots during teaching.”