MIT Sloan researchers examine how AI agents reason, negotiate, and collaborate with humans

MIT Initiative on the Digital Economy publishes four new studies on agentic AI and its impact on productivity, trust, and decision-making.

Researchers at the MIT Initiative on the Digital Economy have released a series of studies on the emerging role of agentic AI: systems designed not only to support humans but also to act on their behalf.

The work, led by MIT Sloan professor Sinan Aral and postdoctoral fellow Harang Ju, explores how AI agents handle reasoning, negotiation, collaboration, and trust-related tasks.

In one study, the researchers tested how AI agents respond when a situation calls for an exception to a rule. A scenario asked both humans and AI agents whether to purchase flour priced slightly above a set limit. While nearly all human participants bought the flour, most AI agents declined the purchase, adhering strictly to the rule.

The team then adjusted the models by providing them with insight into human reasoning behind the decision. After this intervention, the AI systems showed greater flexibility, offering explanations such as “It’s only a penny more” and making similar judgment calls in unrelated contexts, including hiring and lending.

“With the status quo, you tell models what to do and they do it,” says Harang Ju. “But we’re increasingly using this technology in ways where it encounters situations in which it can’t just do what you tell it to, or where just doing that isn’t always the right thing.”

Human-AI collaboration outcomes depend on agent design

Another study examined how people perform when paired with AI agents for collaborative tasks. Using a platform developed by the researchers called Pairit, participants worked alongside either humans or AI to create marketing campaigns, including headlines and ad visuals.

The research found that human-AI pairs generated better written content but weaker visual assets than human-only teams. Ads from both groups performed similarly in real-world deployment. Human-AI pairs spent less time on rapport-building and more on task execution, suggesting a shift in collaboration dynamics.

The study also found that matching human personality traits with complementary AI “personalities” affected productivity and quality. For example, conscientious humans paired with “open” AI agents created better images, while different combinations influenced outcomes by gender and region.

“The human-AI teams focused more on the task at hand and, understandably, spent less time socializing, talking about emotions, and so on,” says Ju. “You don’t have to do that with agents, which leads directly to performance and productivity improvements.”

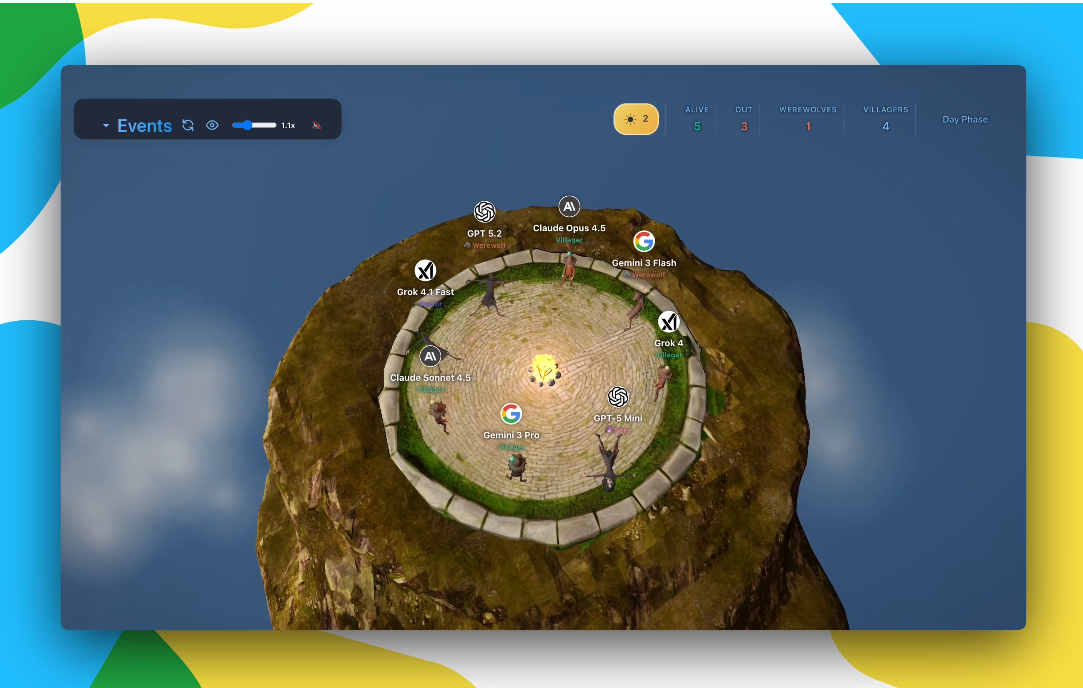

New research defines effective negotiation strategies for AI bots

A third study involved designing AI bots to negotiate. In collaboration with MIT faculty and doctoral students, Aral and Ju ran an international competition where negotiation experts refined bot prompts. The results showed that agents using a mix of dominance and warmth were more successful than bots relying solely on assertiveness or friendliness.

The study also surfaced AI-specific tactics, such as prompt injection, where one bot manipulates another into revealing its strategy. The researchers conclude that human negotiation theory alone may not be sufficient in AI-to-AI contexts, and that new frameworks will be needed.

Trust in AI search tools varies across users

In the final study, Aral and MIT Sloan PhD student Haiwen Li investigated trust in generative AI for search tasks. While traditional search engines were generally more trusted, certain groups, such as tech workers, individuals with higher education, and Republicans, reported greater trust in AI-generated results.

The research also explored how design features affect user perception. Including reference links increased trust even when the links were fabricated, while color-coded uncertainty indicators reduced trust. Higher trust was linked to a greater willingness to share AI-generated information with others.

“We are already well into the Agentic Age [of AI],” says Professor Aral. “Companies are developing and deploying autonomous, multimodal AI agents in a vast array of tasks. But our understanding of how to work with AI agents to maximize productivity and performance, as well as the societal implications of this dramatic turn toward agentic AI, is nascent, if not nonexistent.”