EdTechX Summit: How can we use AI responsibly in education?

A panel of experts at the EdTechX Summit in London considered how educators can harness the power of artificial intelligence (AI) in a responsible and ethical way.

As AI reshapes how educators teach, learn, and develop skills, the panel considered the potential risks of using AI in education and how they can be mitigated so that its power can still be harnessed to improve outcomes.

Sian Cooke, Head of Education Technology Evidence and Adoption at the U.K.’s Department for Education (DfE), highlighted resources on AI recently published by the government. The materials aim to help schools and colleges use AI safely and effectively.

“There is also a hardware challenge,” Cooke shared, noting that the DfE has put a lot of focus on “getting the basics right,” such as access to Wi-Fi and laptops. “Teachers already have so much to worry about, they want technology that works,” she told delegates.

However, the DfE is also keen to promote the use of AI to increase efficiency. “We want to promote the use of this technology so teachers can focus on what they are best at,” Cooke explained. While she was hopeful that AI could unlock potential and help deliver great teaching for every child, she also voiced concern that unequal access could widen the digital divide.

Professor Manolis Mavrikis, Professor in Artificial Intelligence in Education at IOE, UCL's Faculty of Education and Society, told delegates that discussion of the use of new technologies in education has become polarized. “Even with positive use of EdTech we are seeing muddled arguments around screentime,” he explained.

Mavrikis argued that accumulating evidence supports the application of AI in the classroom. He also cautioned that students are likely to keep using the technology even if educators do not. “If we don’t show them the best way to use it then they will use it in a lazy way,” he explained, warning against its unregulated use.

Guadalupe Sampedro, Partner at law firm Cooley LLP, explained that any legal framework on the use of AI will be complicated to implement. “From a legal perspective, AI is very new and it’s constantly evolving,” she said. “We already have a very complete legal framework that is difficult to navigate for companies.”

With many companies struggling to train voice recognition systems for children because of data protection rules, Sampedro said some had found a workaround using synthetic data. “It’s not easy, but it’s doable,” she explained.

Sampedro added that while many want reduced complexity, the EU’s General Data Protection Regulation (GDPR) and U.K. GDPR will make that difficult. That said, she noted that legislation in the U.S. is currently “a bit of a Wild West” and called for a consistent global approach.

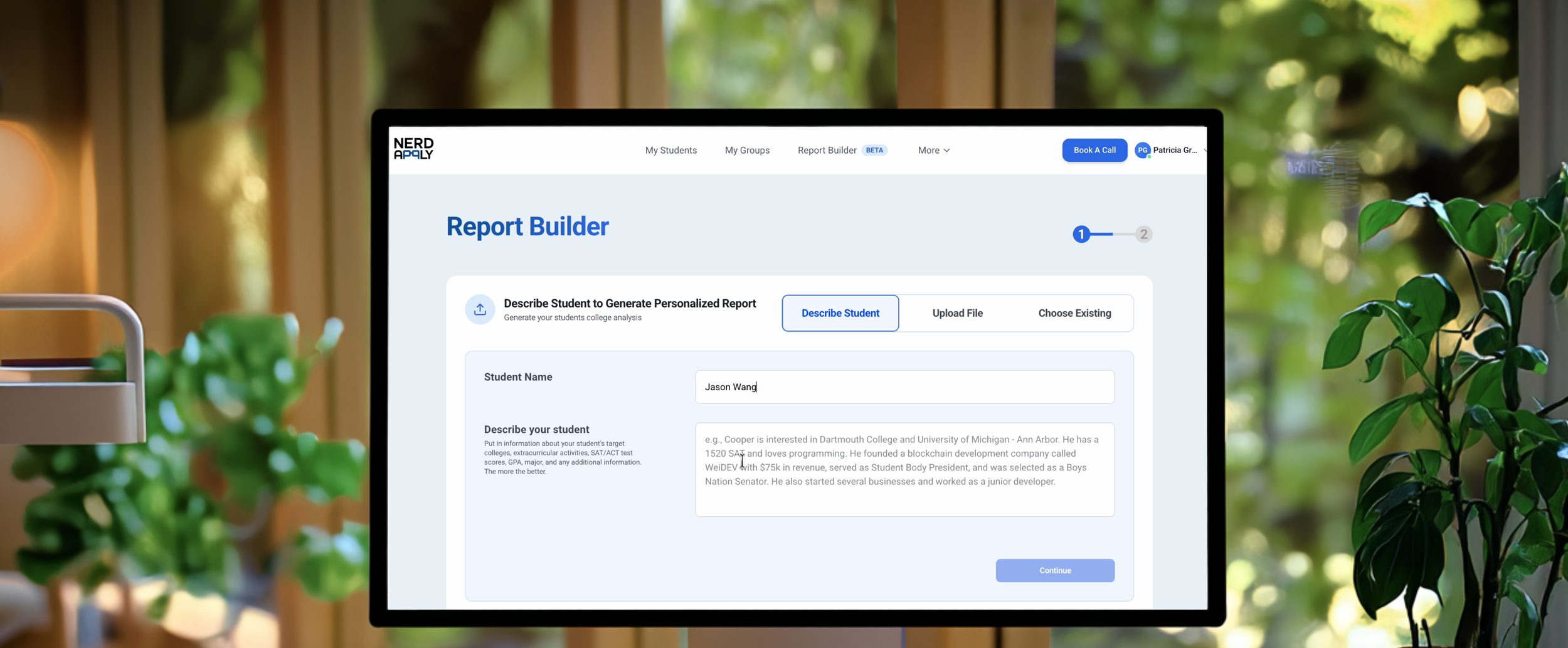

Joshua Wohle, CEO and Co-Founder of learning technology platform Mindstone, said that AI-powered technology is already here and being used. He felt it was important that organizations work with this reality rather than try to prevent its use. “The worst case scenario is that you force employees to use personal accounts and data gets used in an unsafe way,” he explained. “We have to make employees feel that they can use it.”

However, he did acknowledge that there was a need for proper data to power AI tools effectively. “If you are scared to give it data, it won’t work,” Wohle added. “AI can only be as useful as the data you feed it.”