UC Berkeley and UCSF researchers use AI to decode brain signals into near-real-time speech

New neuroprosthesis translates motor cortex activity into audible voice output for individuals with vocal-tract paralysis.

Researchers from the University of California, Berkeley and the University of California, San Francisco have developed a brain-computer interface that can decode speech-related brain activity into audible speech in near real time.

The new system sharply reduces the processing delay of earlier approaches, which took up to eight seconds to convert neural signals into sound.

The team used an AI-based approach to decode speech at speeds comparable to commercial voice assistants. The system samples neural activity from the motor cortex, the part of the brain that controls vocal movements, and uses artificial intelligence to interpret those signals as spoken output.

Streaming algorithms adapted from commercial speech tech

“Our streaming approach brings the same rapid speech decoding capacity of devices like Alexa and Siri to neuroprostheses,” says Gopala Anumanchipalli, assistant professor of electrical engineering and computer sciences at UC Berkeley and co-principal investigator with UCSF’s Edward Chang. “Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming.”

Unlike earlier neuroprosthetic methods, which synthesized speech slowly or after a delay, the system applies streaming algorithms that decode and produce speech almost instantaneously.

Training AI without spoken data

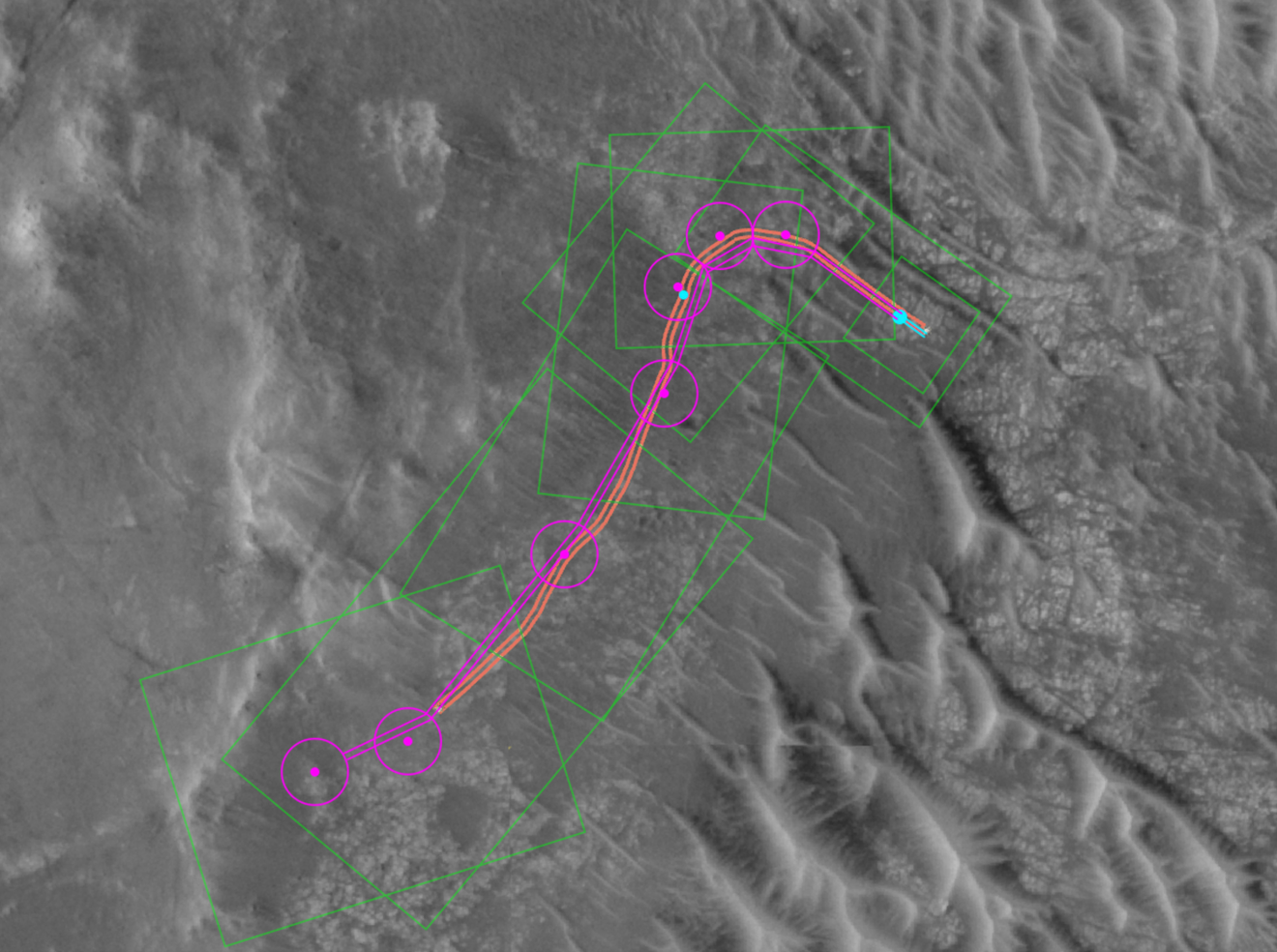

To train the AI, the research team worked with Ann, a participant with complete vocal-tract paralysis. Since she cannot produce audible speech, the system relied on her silent attempts to speak in response to on-screen prompts. These attempted responses triggered measurable patterns in her brain’s motor cortex.

“This gave us a mapping between the chunked windows of neural activity that she generates and the target sentence that she’s trying to say, without her needing to vocalize at any point,” says Kaylo Littlejohn, Ph.D. student and co-author of the study.

With no direct audio reference against which to train the AI's output, the team used synthetic speech tools to simulate what Ann was trying to say.

“We used a pretrained text-to-speech model to generate audio and simulate a target,” says Ph.D. student Cheol Jun Cho. “And we also used Ann’s pre-injury voice, so when we decode the output, it sounds more like her.”

The approach allows the system to preserve aspects of the speaker’s natural voice while compensating for the lack of vocal output. Researchers say the technique could serve as the foundation for future communication tools designed for people who have lost the ability to speak.